Strategies for linking to obsolete websites

I've been blogging for a long time. Over the years, I've linked to tens of thousands of websites. Inevitably, some of those sites have gone. Even when sites still exist, webmasters seem to have forgotten that Cool URIs Don't Change.

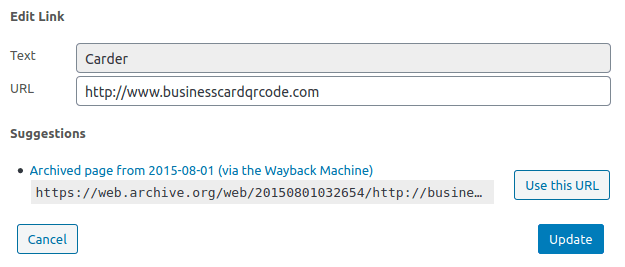

I use the WordPress Broken Link Checker plugin. It periodically monitors the links on my site and lets me know which ones are dead. It also offers to link to Wayback Machine snapshots of the page.

It doesn't always work, of course. Sometimes the page will have been taken over by spammers, and the snapshot reflects that.
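If you want to look up a snapshot by hand rather than through the plugin, here is a minimal sketch. It assumes the Wayback Machine's public availability endpoint at `archive.org/wayback/available`; the function names are my own, and this is not a description of how the plugin works internally.

```python
import json
import urllib.parse
import urllib.request

# Public Wayback Machine availability endpoint.
AVAILABILITY_API = "https://archive.org/wayback/available"


def availability_query(url, timestamp=None):
    """Build the request URL. timestamp is YYYYMMDD (optionally with hhmmss)."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp
    return AVAILABILITY_API + "?" + urllib.parse.urlencode(params)


def closest_snapshot(payload):
    """Return the snapshot URL from a decoded API response, or None."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available") and closest.get("status") == "200":
        return closest["url"]
    return None


def lookup(url, timestamp=None):
    """Network call: ask the Wayback Machine for the snapshot closest to timestamp."""
    with urllib.request.urlopen(availability_query(url, timestamp), timeout=10) as resp:
        return closest_snapshot(json.load(resp))
```

A snapshot being available says nothing about whether it shows the page you meant to link to, so a spammer-era capture will still pass this check.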

This isn't some SEO gambit. I believe that the web works best when users can seamlessly surf between sites. Forcing them to search for information is user-hostile.

What I'm trying to achieve

When a visitor clicks on a link, they should get (in order of preference):

- The original page

- An archive.org view of the page

  - Ideally the most recent snapshot

  - If the recent snapshot doesn't contain the correct content, a snapshot of the page around the time the link was made

  - A snapshot of the site's homepage around the time the link was made

- A replacement page. For example, Topsy used to show who had Tweeted about your page. Apple killed Topsy - so now I point to Twitter's search results for a URL.

- If there is no archive, and no replacement, and the link contains useful semantic information - leave it broken.

- Remove the link.
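The order of preference above can be sketched as a simple fallback chain. This is only an illustration: the `Link` fields, the `is_good` callback (which could be an HTTP check, a human review, or both) and the function names are all hypothetical. The one thing it relies on from archive.org is the `https://web.archive.org/web/<timestamp>/<url>` address form, which resolves to the capture nearest the timestamp, so today's date stands in for "most recent".

```python
from dataclasses import dataclass
from typing import Callable, Iterator, Optional

WAYBACK = "https://web.archive.org/web/{stamp}/{url}"


@dataclass
class Link:
    url: str                           # the original outbound link
    linked_on: str                     # date the link was made, as YYYYMMDD
    homepage: str                      # root of the linked site
    replacement: Optional[str] = None  # hand-picked substitute page, if any
    semantic: bool = False             # True if the dead URL is still informative


def candidates(link: Link, today: str) -> Iterator[str]:
    """Yield possible targets, best first."""
    yield link.url                                              # the original page
    yield WAYBACK.format(stamp=today, url=link.url)             # most recent snapshot
    yield WAYBACK.format(stamp=link.linked_on, url=link.url)    # page when linked
    yield WAYBACK.format(stamp=link.linked_on, url=link.homepage)  # homepage when linked
    if link.replacement:
        yield link.replacement                                  # replacement page


def resolve(link: Link, today: str, is_good: Callable[[str], bool]) -> Optional[str]:
    """Return the first acceptable target, the broken URL, or None to remove the link."""
    for candidate in candidates(link, today):
        if is_good(candidate):
            return candidate
    # Nothing usable: keep the dead link only if it carries meaning on its own.
    return link.url if link.semantic else None
```

Returning `None` stands for "remove the link"; everything else is a URL to point the visitor at.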

Some links are from people leaving comments and setting a profile link alongside them. Is it useful for future web historians to know that Blogger Profile 1234 commented on my blog and your blog?

Some links are only temporarily dead (for tax reasons?) - so I tend to leave them broken.

The Internet Archive say that "If you see something, save something". So, going forward, I'll submit every link out from my blog to the Archive. I'm hoping to find a plugin to automate that - any ideas?
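Until a plugin turns up, here is a rough sketch of the idea: pull the outbound links from a post's HTML and ask the Archive to capture each one. It assumes the "Save Page Now" address form `https://web.archive.org/save/<url>`; the helper names and the User-Agent string are made up for illustration, and the Archive may rate-limit bulk requests.

```python
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

SAVE_ENDPOINT = "https://web.archive.org/save/"


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against the post's URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def outbound_links(html, base_url):
    """Return unique http(s) links that point away from the blog's own host."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    own_host = urlparse(base_url).netloc
    result = []
    for link in collector.links:
        parsed = urlparse(link)
        if parsed.scheme in ("http", "https") and parsed.netloc != own_host and link not in result:
            result.append(link)
    return result


def save_url(link):
    """Build the Save Page Now address for a link."""
    return SAVE_ENDPOINT + link


def submit(link):
    """Network call: request a capture and return the HTTP status."""
    req = urllib.request.Request(save_url(link), headers={"User-Agent": "link-archiver-sketch"})
    with urllib.request.urlopen(req, timeout=60) as resp:
        return resp.status
```

Running `submit` over `outbound_links(post_html, post_url)` whenever a post is published would cover new links; a pause between calls seems polite.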

Because I'm currently in a redevelopment phase here, moving code between domains and services, I can see from my Apache logs that there are many 404s being sent out as pages (temporarily) move around. I've also got an issue to solve: whilst most of my blogging over the years has used a permalink of site-date-title, for a while I used site-title-date. I'm sure I had a good reason at the time, but I can't recall it now. Edent has some ideas on how to deal with one's own outbound dead links though. Worth reviewing.
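For the mixed permalink problem, one option is to map the minority scheme onto the main one and answer with a redirect instead of a 404. A small sketch follows; the path shapes are pure assumption (`/slug/YYYY/MM/` for site-title-date and `/YYYY/MM/slug/` for site-date-title), since the real formats aren't given here.

```python
import re

# Hypothetical "site-title-date" shape: /my-post-title/2015/03/
OLD_STYLE = re.compile(r"^/(?P<slug>[a-z0-9-]+)/(?P<year>\d{4})/(?P<month>\d{2})/?$")


def canonical_path(path):
    """Return the /YYYY/MM/slug/ form for an old-style path, or None if it doesn't match."""
    match = OLD_STYLE.match(path)
    if not match:
        return None
    return "/{year}/{month}/{slug}/".format(**match.groupdict())
```

Feeding the 404 paths from the server logs through `canonical_path` would show which ones can be redirected permanently.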

No idea where you’d find a plugin, but bear in mind that not all domains allow access to the IA crawler. I recall it being quite aggressive a few years back, so I blocked their crawlers. On big sites they’d take quite a bit of bandwidth for negligible return. No idea on the numbers either.

I do like the idea of grabbing the content of what you originally referenced though, especially if context is lost or irrelevant through missing page or revamped domain.

You might struggle to match up old IA content where crawling was sparse or a page doesn’t exist, especially if a spammer has control of the domain. And of course, not all new domain owners are spammers either. How are you going to decide if a page returning a 200 (if it’s a link to root) is the original domain content or new content where no IA snapshot exists?

Would you have a list of bad words (pr0n, etc.)? Would you check for a Google cache? Would you parse the page title and see if the content ranks for the string?

All of those could help indicate whether the page is at least worthy in some regard, and based upon the response you could decide to retain the link or push them to your custom gone page or another page created with the IA scrape.
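Two of those checks are easy to sketch. The snippet below flags a live page if it contains anything from a bad-word list, and, when an archived copy does exist, if its title has drifted a long way from the archived title. Note the swap: it compares titles against the archive rather than testing search rankings, which would need a search API. The word list, the threshold and the function names are illustrative guesses, not tuned values.

```python
import re
from difflib import SequenceMatcher

BAD_WORDS = {"casino", "pr0n", "viagra"}  # illustrative list only


def extract_title(html):
    """Return the text of the first <title> element, or an empty string."""
    match = re.search(r"<title[^>]*>(.*?)</title>", html, re.IGNORECASE | re.DOTALL)
    return match.group(1).strip() if match else ""


def bad_word_hits(html):
    """Return the bad words that appear as whole tokens in the page, sorted."""
    words = set(re.findall(r"[a-z0-9]+", html.lower()))
    return sorted(words & BAD_WORDS)


def title_similarity(live_html, archived_html):
    """Similarity of the two page titles, from 0.0 (unrelated) to 1.0 (identical)."""
    live = extract_title(live_html).lower()
    archived = extract_title(archived_html).lower()
    return SequenceMatcher(None, live, archived).ratio()


def looks_hijacked(live_html, archived_html=None, threshold=0.5):
    """Heuristic only: True means the page deserves a manual look."""
    if bad_word_hits(live_html):
        return True
    if archived_html is not None:
        return title_similarity(live_html, archived_html) < threshold
    return False  # no archive to compare against, so this check cannot tell
```

With no archived copy, only the bad-word check applies, which is exactly the hard case raised above: a clean-looking page from a new owner will pass.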

Sounds fun either way.

I like that Jeremy Keith auto-saves any link from his site to archive.org. So even if the link itself is broken, it should be possible to find it there. I like the idea of the site itself trying to fix it. For some time I had my site fail to build if any broken links were found, but as I interact with more sites, and push more content daily, it's a bit difficult to do that.
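A build-time check along those lines might look like the sketch below. It is not the setup described above, just one way to do it: the names are hypothetical, the fetcher is injectable so it can be tested offline, and `max_broken` is an added tolerance so a few dead links don't block every deploy.

```python
import sys
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Network call: return the HTTP status for url, or None if unreachable."""
    req = urllib.request.Request(url, method="HEAD", headers={"User-Agent": "link-check-sketch"})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with an error status
    except (urllib.error.URLError, OSError):
        return None      # DNS failure, refused connection, timeout, and so on


def broken_links(urls, fetch=http_status):
    """Return (url, status) pairs for links that fail or answer with 4xx/5xx."""
    broken = []
    for url in urls:
        status = fetch(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken


def check_or_fail(urls, fetch=http_status, max_broken=0):
    """Report broken links on stderr and return an exit code for the build."""
    broken = broken_links(urls, fetch)
    for url, status in broken:
        print(f"BROKEN {status} {url}", file=sys.stderr)
    return 1 if len(broken) > max_broken else 0
```

In a build script this would end with `sys.exit(check_or_fail(links))`. Some servers reject HEAD requests, so a real checker would probably retry with GET before calling a link dead.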

Haha, I was thinking the same thing recently! I’ve been running my site and its links through Web Archive each week for the past month, so there are archived versions.

I recently started checking all my old posts manually. Turns out only a handful of links still work. The thing that really annoyed me tho is when a domain changed ownership and displays wildly different content eventually. Some even with disreputable content. So yeah, some “link hygiene” on occasion is probably a good idea.

Yes, the only URLs I can be confident about in my blog are the ones I control. They all still work...

It's not just little fly-by-night companies that go away, either - I used to have links to quite a few clips on Google Video - remember that? I had to go away and try and find them on YouTube and re-link...

Lots of nice ideas there, though, thanks - gonna try that plugin...