Compressing Text into Images

(This is, I think, a silly idea. But sometimes the silliest things lead to unexpected results.)

The text of Shakespeare's Romeo and Juliet is about 146,000 characters long. Thanks to the English language, each character can be represented by a single byte. So a plain Unicode text file of the play is about 142KB.

In Adventures With Compression, JamesG discusses a competition to compress text and poses an interesting thought:

Encoding the text as an image and compressing the image. I would need to use a lossless image compressor, and using RGB would increase the number of values associated with each word. Perhaps if I changed the image to greyscale? Or perhaps that is not worth exploring.

Image compression algorithms are, generally, pretty good at finding patterns in images and squashing them down. So if we convert text to an image, will image compression help?

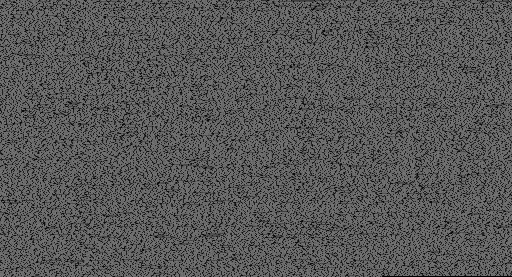

The English language and its punctuation are not very complicated, so the play only contains 77 unique symbols. The ASCII value of each character spans from 0 - 127. So let's create a greyscale image which each pixel has the same greyness as the ASCII value of the character.

Here's what it looks like when losslessly compressed to a PNG:

That's down to 55KB! About 40% of the size of the original file. It is slightly smaller than ZIP, and about 9 bytes larger than Brotli compression.

The file can be read with the following Python:

from PIL import Image

image = Image.open("ascii_grey.png")

pixels = list(image.getdata())

ascii = "".join([chr(pixel) for pixel in pixels])

with open("rj.txt", "w") as file:

file.write(ascii)But, even with the latest image compression algorithms, it is unlikely to compress much further; the image looks like random noise. Yes, you and I know there is data in there. And a statistician looking for entropy would probably determine that the file contains readable data. But image compressors work in a different realm. They look for solid blocks, or predictable gradients, or other statistical features.

But there you go! A lossless image is a pretty efficient way to compress ASCII text.

@Edent kinda like digital microfiche, lol.

@Edent You could probably reduce it further by tokenising the 50 most common words down to greyscale values, maybe 45 or so as you'd then need further values as control codes to embed those tokenisations at the start of the image (or cheat, and do that out of band). I briefly made a start on trying that and then realised I have several more useful things to do today than implement a janky compression algorithm.

I was thinking you could do it with 28 "gylphs/things". 26 for the normal a-z and 2 more for a toggle between caps and to toggle "extras" (such as to 10 for numerals and x for space, comma, full stop, dash, colon, semicolon, quotemark - that's 17 - so still some spare for other punctuation). So over half a byte per entry - so you could fit 4 "things" into 3 bytes.... I don't want to write something to test this but...

Has amybody tried lossless webp? (There's lossy webp and lossless webp, make sure you try the lossless one)

something like this:

ffmpeg -i image.png -lossless 1 -compression_level 6 -quality 100 out.webp

Note: webp has a max res of 16383 pixels either way, so you might be limited by that.

Try it and let us know the results!

I was going to say that PNG compression is just DEFLATE and so should work approximately as well as ZIP (or gzip), but it turns out that PNG images can also be pre-filtered by subtracting neighbouring pixels (for various, dynamically chosen values of "neighbour"). As you say, ASCII text isn't a good match for that, unless the text is screaming AAAAAAAAAAAAAAAA at you, so that leaves just the DEFLATE compression.

@Edent

You could maybe get it under 100 KB by rewriting it in the Shavian alphabet.

Compressing Text into Images | Hacker News

It's not much but you can shave it off from 55248 to 55224 using this:

#!/bin/sh

for i in *.png; do

if [[ -f "$i" ]]; then

exiftool -png:all= "$i"

optipng -o7 "$i"

zopflipng -y -m --filters=p "$i" "$i"

fi

done

juliet: wherefore art thou, romeo?

romeo: [typing intensifies]

More comments on Mastodon.