The ePub format is the cross-platform way to package an eBook. At its heart, an ePub is just a bundled webpage with extra metadata - that makes it extremely easy to build workflows to create them and apps to read them. Once you've finished authoring your ePub, you've got a folder full of HTML, CSS, metadata documents, and other resources. The result is then stored in a standard Zip file and is…

Continue reading →
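
The packaging step in the excerpt above (a folder of HTML, CSS, and metadata stored in a standard Zip file) might be sketched roughly as follows. The file names and contents are hypothetical placeholders, and the result is not a complete, valid ePub. The one firm detail is that the ePub container format expects the `mimetype` entry to come first and to be stored uncompressed.

```python
import io
import zipfile


def build_epub(files: dict[str, str]) -> bytes:
    """Bundle text files into an ePub-style Zip archive held in memory."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        # The mimetype entry goes first and is stored without compression.
        zf.writestr("mimetype", "application/epub+zip",
                    compress_type=zipfile.ZIP_STORED)
        # Everything else can use ordinary DEFLATE compression.
        for name, content in files.items():
            zf.writestr(name, content, compress_type=zipfile.ZIP_DEFLATED)
    return buf.getvalue()


# Hypothetical placeholder content, for illustration only.
sample_files = {
    "META-INF/container.xml": "<container/>",
    "content.opf": "<package/>",
    "chapter1.xhtml": "<html><body><p>Hello</p></body></html>",
}
epub_bytes = build_epub(sample_files)
```

Writing to an in-memory buffer keeps the sketch free of filesystem side effects. A real workflow would write to a `.epub` file and include a proper package document.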

(This is, I think, a silly idea. But sometimes the silliest things lead to unexpected results.) The text of Shakespeare's Romeo and Juliet is about 146,000 characters long. Thanks to the English language, each character can be represented by a single byte. So a plain UTF-8 text file of the play is about 142KB. In Adventures With Compression, JamesG discusses a competition to compress text…

Continue reading →
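
The size estimate in the excerpt above can be checked with a couple of lines. This is only a sketch: the 146,000 figure is the excerpt's approximation, and `sample` is a made-up stand-in for the play's text.

```python
# Approximate character count quoted in the excerpt.
char_count = 146_000

# ASCII characters occupy one byte each in UTF-8.
sample = "But, soft! what light through yonder window breaks?"
bytes_per_char = len(sample.encode("utf-8")) / len(sample)

# Convert bytes to kilobytes, taking 1 KB as 1024 bytes.
size_kb = char_count * bytes_per_char / 1024
print(f"{size_kb:.1f} KB")
```

Dividing by 1024 rather than 1000 is what makes the figure land at roughly 142KB instead of 146KB.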

There are lots of new image compression formats out there. They excel at taking large, complex pictures and algorithmically reducing them to smaller file sizes. All of the comparisons I've seen show how good they are at squashing down big files. I wanted to go the other way. How good are modern codecs at dealing with tiny files? Using GIMP, I created an image which was a single white pixel,…

Continue reading →

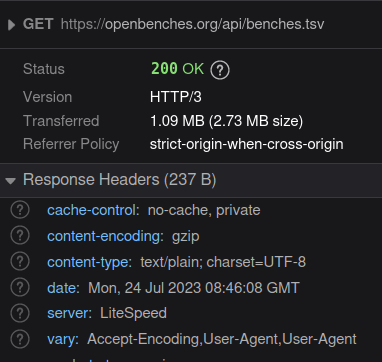

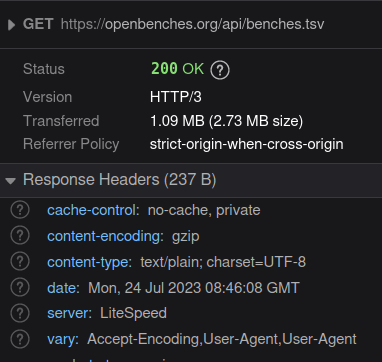

Perhaps this was obvious to you, but it wasn't to me. So I'm sharing in the hope that you don't spend an evening trying to trick your webserver into doing something stupid. For years, HTTP content has been served with gzip compression (gz). It's basically the same sort of compression algorithm you get in a .zip file. It's pretty good! But there's a new(er) compression algorithm called Brotli…

Continue reading →
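
As a small illustration of the gzip side of the excerpt above, the sketch below compresses a repetitive HTML-like payload with Python's standard library. It then decodes the result using `zlib`, which implements DEFLATE, the algorithm commonly used inside .zip files. The payload is invented for the example. Brotli is left out because it is not in the standard library.

```python
import gzip
import zlib

# Invented, highly repetitive payload standing in for an HTML response body.
payload = b"<p>Hello, compression!</p>\n" * 200

# gzip wraps a DEFLATE stream in a small header and trailer.
gz = gzip.compress(payload)

# zlib can decode the gzip container when asked to expect gzip framing.
restored = zlib.decompress(gz, wbits=zlib.MAX_WBITS | 16)

print(len(payload), "bytes before,", len(gz), "bytes after")
```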

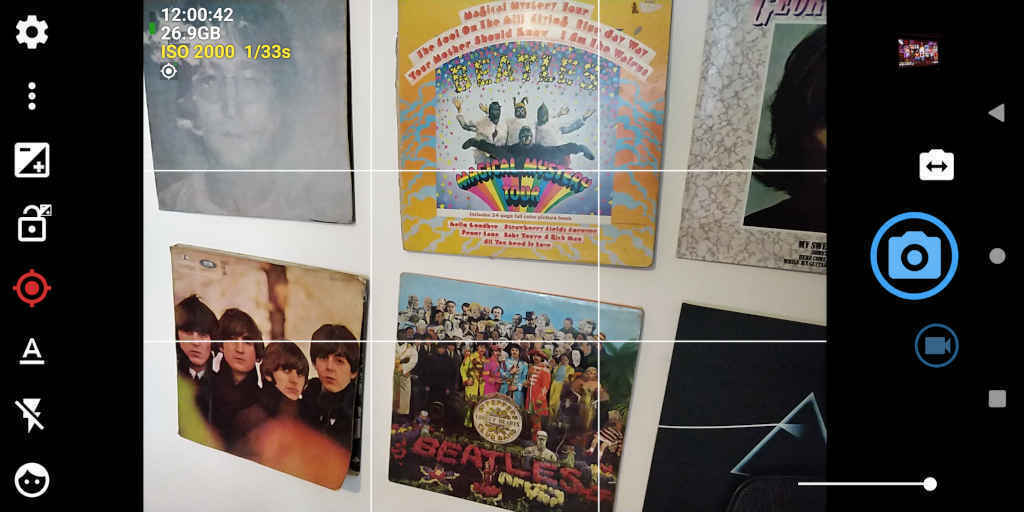

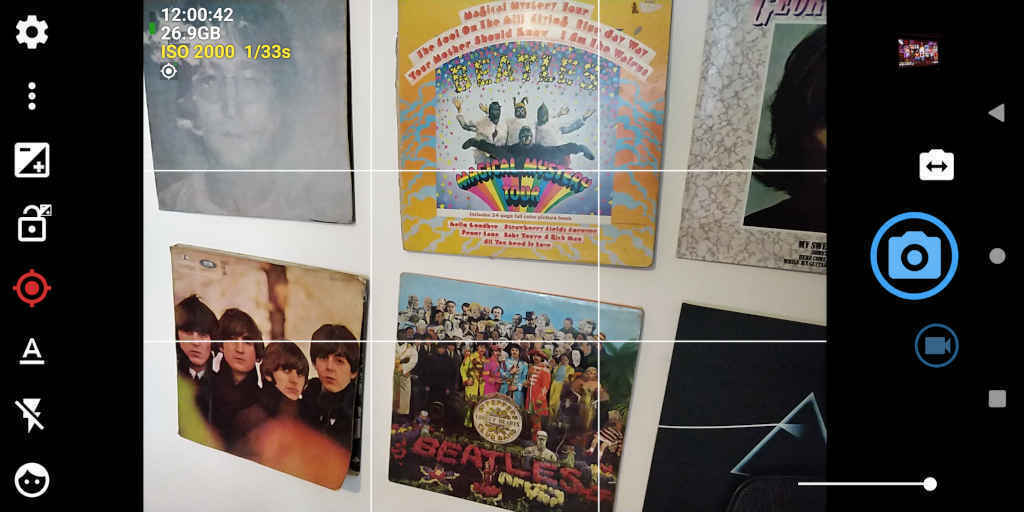

I have a screenshot of my phone's screen. It shows an app's user interface and a photo in the middle. Something like this: If I set the compression to be lossy - the photo looks good but the UI looks bad. If I set the compression to be lossless - the UI looks good but the filesize is huge. Is there a way to selectively compress different parts of an image? I know WebP and AVIF are pretty…

Continue reading →

How efficient are modern codecs? Can we ever work out whether the power use of compression algorithms is a net gain for global power consumption? Come on a thought experiment with me. I have invented a new image compression format. It shrinks images to 50% smaller sizes than AVIF and is completely lossless. Brilliant! There's only one problem - it is 1 million times slower. If it takes your…

Continue reading →