UK Flood Forecast on Amazon Alexa

You can now get the UK's flood forecast as part of your Flash Briefing on Amazon's Alexa.

I've tried previously to create an interactive Alexa skill - it did not go well. This time, I thought I'd create an easier skill - a Flash Briefing. You ask Alexa for your daily news report and it reads out items that you've configured - news, weather, traffic, that sort of thing.

For a basic skill, all you need is a tiny scrap of JSON. In this case:

    {
        "uid": "https://example.com/flood/index.php",
        "updateDate": "2017-07-02T09:30:00Z",
        "titleText": "UK Flood Forecast",
        "mainText": "The overall flood risk is VERY LOW for the next five days. Minor surface water flooding impacts are possible, but not expected, on Thursday.",
        "redirectionUrl": "https://flood-warning-information.service.gov.uk/"
    }

If you can dynamically generate something like that, and host it on a website, you can make a skill.
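As a rough illustration of "dynamically generate", here is a minimal Python sketch that builds the same five fields shown in the JSON above and stamps them with the current UTC time. The function names `build_briefing` and `render_feed` are hypothetical, and the forecast text is simply passed in as a string - fetching it from the real forecast data is not shown, and this is not necessarily how the live skill does it.

```python
import json
from datetime import datetime, timezone


def build_briefing(main_text, when=None):
    """Return one Flash Briefing item as a dict (hypothetical helper)."""
    # Default to "now"; the feed's timestamp is written in UTC with a trailing Z.
    when = when or datetime.now(timezone.utc)
    return {
        "uid": "https://example.com/flood/index.php",
        "updateDate": when.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "titleText": "UK Flood Forecast",
        "mainText": main_text,
        "redirectionUrl": "https://flood-warning-information.service.gov.uk/",
    }


def render_feed(main_text, when=None):
    """Serialise the item to the JSON text a web server would return."""
    return json.dumps(build_briefing(main_text, when), indent=2)


if __name__ == "__main__":
    # Assumption: the forecast sentence has already been obtained elsewhere.
    print(render_feed("The overall flood risk is VERY LOW for the next five days."))
```

Whatever serves the output only has to return that text at a stable URL; the rest is Amazon's configuration process.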

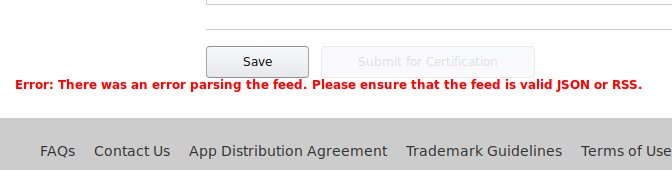

Following the Amazon process is slightly tedious - especially when it gives you errors like this.

Pro Tip - tell your users why and where something has failed. They can't be expected to magically guess what the problem is.

You can beta test a skill before it goes live. The submission and review process was painless - it took about 12 hours on a weekend.

Voice Interfaces For Government

(My thoughts - not my employer's)

This is an unofficial service that I've built using the Open Data produced by my friends at the Environment Agency.

In an ideal world, you wouldn't need to create specific skills like this. Nor would you have to create a specially formatted file for every voice assistant out there. Websites would be full of semantic markup and intelligent assistants would be easily able to work out when the council offices were open, which bus routes were delayed, and what the flood risk was.

We're not there yet.

There is a huge industry push towards voice interfaces. Is it incumbent on Government to create services like this, or is it enough to create APIs and let others build things?

There's a long-standing moratorium on the UK Government creating mobile apps - we can't waste money developing for a dozen different platforms, keeping them updated, reacting to changing requirements from store owners.

Is the same true for voice interfaces? Siri, Cortana, Alexa, whatever Google's one is called, Bixby, and a dozen more round the corner. Each needing a different configuration to get them to vocalise your news. Each needing you to sign up to yet-another developer agreement.

My job is looking at open standards. We're too early in this industry to converge on one-standard-to-rule-them-all. But if Voice is The Future™, we all need to think about how we make it as easy as possible to deploy interactive speech services.

Voice feels like it could be something - but only if it is open to all.

You can install the UK's flood forecast on Amazon's Alexa.
