Bugs in Twitter Text Libraries

The Twitter Engineering Team have a set of text-processing classes intended to simplify and standardise the recognition of URLs, screen names and hashtags. Dabr uses them so that it stays consistent with how Twitter itself renders tweets.

One advantage of the text processing is that it recognises www.example.com as a URL and automatically creates a hyperlink. Since dropping the "http://" saves 7 characters, 5% of Twitter's 140-character limit for messages, this is great.
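As a rough illustration of the kind of shortcut that makes bare addresses clickable, here is a minimal sketch that only treats tokens beginning with "www." as links. The function name `autolink_www` and the pattern are hypothetical; this is not the regex Twitter's libraries actually use.

```python
import re

# Hypothetical, deliberately naive pattern: "www." followed by one or more
# dot-separated labels of ASCII letters, digits or hyphens, plus an optional path.
WWW_PATTERN = re.compile(r"\bwww\.[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+(?:/\S*)?")


def autolink_www(text: str) -> str:
    """Wrap every www.-prefixed address in an HTML anchor with an http:// href."""
    return WWW_PATTERN.sub(
        lambda m: f'<a href="http://{m.group(0)}">{m.group(0)}</a>', text
    )


print(autolink_www("See www.example.com now"))
print(autolink_www("Read news.bbc.co.uk today"))
```

It happily links `www.example.com`, but leaves `news.bbc.co.uk` untouched because there is no "www." to key on.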

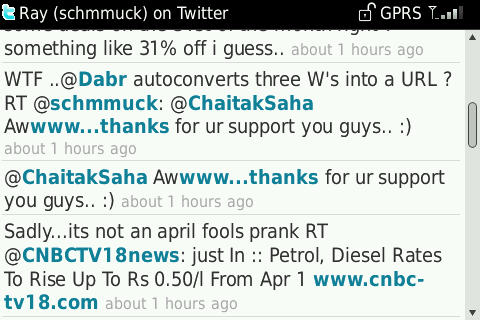

So, I was mightily surprised to get this bug report from user "schmmuck":

Dabr rendering error

How very odd... This is how it looks on m.twitter.com.

m.twitter rendering error

Twitter also use mobile.twitter.com for smartphones. Here's how that site renders the text.

mobile.twitter rendering error

Finally, let's take a look at the "canonical" rendering on Twitter.com:

Twitter rendering error

The Problem(s)

The first issue is inconsistency. Twitter ought to be using the same regex on each of its sites, but it doesn't. As a result, the same tweet renders differently depending on where you read it, and developers get divergent behaviour depending on which implementation they follow. This leads to confusion, which leads to fear, which, as we all know, leads to anger... and so forth.

Secondly, and more importantly, parsing is hard. There are so many edge cases that errors inevitably creep in. My post about hashtags explains the problems in defining what should be recognised.

So, based on what we've seen, should Twitter recognise any of the following as URLs?

news.bbc.co.uk - no www there.

invalid.name - a silly URL, but a valid one.

खोज.com - internationalised domain names contain more than just ASCII.

All of the above are valid, yet none of them is recognised by Twitter.
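Internationalised domain names such as खोज.com still work on the wire because each non-ASCII label is converted to an ASCII "xn--" (Punycode) form before the DNS lookup. Python's built-in `idna` codec, which implements the older IDNA 2003 rules, is enough to show the conversion. The helper name `to_ascii_host` is hypothetical; the point is that a matcher accepting only ASCII letters in the host can never see this address as typed.

```python
def to_ascii_host(host: str) -> str:
    """Convert a possibly internationalised host name to its ASCII (Punycode) form."""
    # The standard-library "idna" codec encodes each non-ASCII label as "xn--..."
    # and leaves plain ASCII labels such as "com" unchanged.
    return host.encode("idna").decode("ascii")


ascii_host = to_ascii_host("खोज.com")
print(ascii_host)  # an "xn--" label followed by ".com"
print(to_ascii_host("news.bbc.co.uk"))  # already ASCII, so unchanged
```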

A (Simple) Solution?

IANA maintains a canonical list of TLDs, which is also available as a plain text list.
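Parsing the plain text list is simple. This sketch assumes the file's layout is a header line beginning with "#" followed by one upper-case TLD per line, with internationalised TLDs in their "xn--" form. It takes the contents as a string, so downloading and caching are left open; the function name `parse_tld_list` is hypothetical and the sample text is a hand-made excerpt, not the real file.

```python
def parse_tld_list(text: str) -> frozenset[str]:
    """Return the TLDs in a plain-text list, lower-cased, ignoring comment lines."""
    tlds = set()
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the "#" header/comment lines.
        if not line or line.startswith("#"):
            continue
        tlds.add(line.lower())
    return frozenset(tlds)


# Hand-made excerpt in the same format (the real file is much longer).
SAMPLE = """# Version (illustrative header line)
COM
IN
NAME
UK
XN--P1AI
"""

TLDS = parse_tld_list(SAMPLE)
print(sorted(TLDS))
```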

Any string containing a "." followed by a valid TLD, then followed by a space or "/" should be treated as a URL.
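A minimal sketch of that rule follows, assuming the message is first split on whitespace so that "followed by a space" becomes "the token ends". The names `looks_like_url` and `find_urls`, the optional "http(s)://" prefix and the tiny hard-coded TLD set are all hypothetical; a real version would load the full list and would also have to cope with trailing punctuation, which this sketch ignores.

```python
import re

# Hypothetical stand-in for the full TLD list (lower-case).
TLDS = frozenset({"com", "in", "name", "uk"})

# Optional scheme, then one or more "label." parts, then the candidate TLD
# (captured), then either the end of the token or "/" and a path.
HOST_PATTERN = re.compile(r"^(?:https?://)?(?:[^./\s]+\.)+([^./\s]+)(?:/\S*)?$")


def looks_like_url(token: str, tlds: frozenset[str] = TLDS) -> bool:
    """True if the token has a '.' followed by a known TLD, then '/' or the end."""
    match = HOST_PATTERN.match(token)
    return bool(match) and match.group(1).lower() in tlds


def find_urls(message: str) -> list[str]:
    """Return the whitespace-separated tokens that the TLD rule treats as URLs."""
    return [token for token in message.split() if looks_like_url(token)]


print(find_urls("Try news.bbc.co.uk or invalid.name today"))
print(find_urls("Hi john.in tomorrow?"))
```

Because the label pattern accepts any non-space character, खोज.com is caught too. Run on "Hi john.in tomorrow?", though, it returns `['john.in']`: a valid TLD is necessary but not sufficient, which is exactly the kind of clash raised further down.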

Your thoughts?

I'm not convinced that adding handling for all TLDs is worth the few extra characters saved.

But you're both definitely right about consistency; that's just sloppy of them!

There is also the problem of clashes such as "Hi john.in tomorrow?" Is that http://www.john.in? I think not. It's those kinds of issues that I believe aren't worth the effort of solving for the sake of 7 characters.

Steven Pears says:

If your TLD list is out of date, you accept that you may not catch 100% of URLs, but you're going to get a much more accurate set of results than with a regex alone, and in the long run people will appreciate it.

It turns out to be a harder problem than expected. The http://www...foo bug was introduced while adding support for some IDNs. I'll investigate the TLD stuff, but the main worry is the gTLD process and what that will mean for the list of valid TLDs.