This post will show you how to programmatically get the cheapest possible price on eBooks from Kobo.

Background

Amazon have decided to stop letting customers download their purchased eBooks onto their computers. That means I can't strip the DRM and read on my non-Amazon eReader.

So I guess I'm not spending money with Amazon any more. I'm moving to Kobo for three main reasons:

- They provide standard ePubs for download.

- ePub DRM is trivial to remove.

- Kobo will undercut Amazon's prices!

Here's the thing. I want to buy my eBooks. It is trivial to pirate almost any modern book. But, call me crazy, I like rewarding writers with a few pennies. That said, I'm not made of money, so I want to get the best (legal) deal possible.

Kobo offer a price match against other eBook retailers. Their policy says:

We'll award a credit to your Kobo account equal to the price difference, plus 10% of the competitor’s price.

I found a book I wanted which was £4.99 on Kobo. The Amazon Kindle price was £4.31.

4.99 - ( (4.99 - 4.31) + (4.31 * 0.1) ) = 3.88
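The sum above can be wrapped in a small helper. This is only a sketch of the quoted policy: the function names are made up for illustration, rounding to the nearest penny is an assumption, and so is treating the credit as zero when the competitor isn't cheaper.

```python
from decimal import Decimal, ROUND_HALF_UP


def price_match_credit(kobo_price: Decimal, competitor_price: Decimal) -> Decimal:
    """Credit = price difference + 10% of the competitor's price."""
    # Assumption: no credit is due unless the competitor is actually cheaper.
    if competitor_price >= kobo_price:
        return Decimal("0")
    return (kobo_price - competitor_price) + competitor_price * Decimal("0.10")


def effective_price(kobo_price: Decimal, competitor_price: Decimal) -> Decimal:
    """What the book costs once the credit is taken into account."""
    return kobo_price - price_match_credit(kobo_price, competitor_price)


net = effective_price(Decimal("4.99"), Decimal("4.31"))
# Assumption: round to the nearest penny; Kobo's own rounding may differ.
print(net.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))  # 3.88
```

When the competitor is cheaper, this works out to 90% of the competitor's price, whatever Kobo charge.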

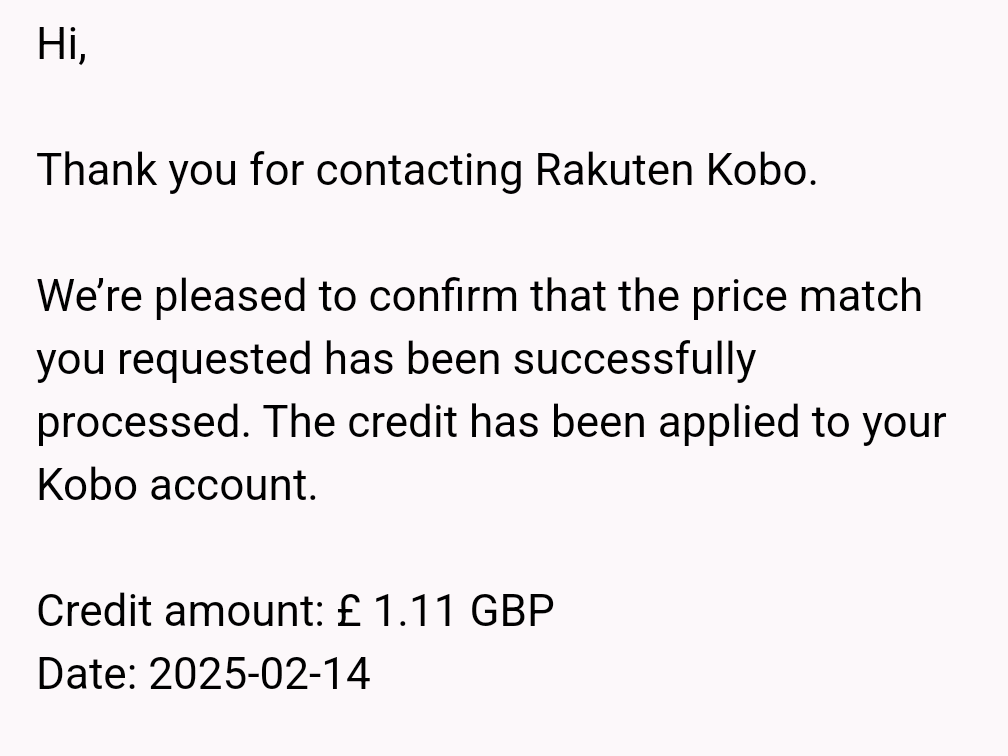

I purchased the book, sent a request for a price match, and got this email a few hours later:

OK! So what steps can we automate, and which will have to remain manual?

Amazon Pricing API

Amazon have a Product Advertising API. You will need to register for the Amazon Affiliate Program and make some qualifying sales before you get API access.

In order to search for an ISBN and get the price back, you need to send:

JSON

    {
      "Keywords": "isbn:9781473613546",
      "Resources": ["Offers.Listings.Price"]
    }

Using the updated Python API for PAAPI:

Python 3

    from paapi5_python_sdk import DefaultApi, SearchItemsRequest, SearchItemsResource, PartnerType


    def search_items():
        access_key = "ABC"
        secret_key = "123"
        partner_tag = "shkspr-21"
        host = "webservices.amazon.co.uk"
        region = "eu-west-1"

        api = DefaultApi(access_key=access_key, secret_key=secret_key, host=host, region=region)

        request = SearchItemsRequest(
            partner_tag=partner_tag,
            partner_type=PartnerType.ASSOCIATES,
            keywords="isbn:9781473613546",
            search_index="All",
            item_count=1,
            resources=["Offers.Listings.Price"]
        )

        response = api.search_items(request)
        print(response)


    search_items()

(Add your own access key, secret key, and tag. You may need to change the host and region depending on where you are in the world.)

That returns something like:

JSON

    {
      "search_result": {
        "items": [
          {
            "asin": "B09JLQHHXN",
            "detail_page_url": "https://www.amazon.co.uk/dp/B09JLQHHXN?tag=shkspr-21&linkCode=osi&th=1&psc=1",
            "offers": {
              "listings": [
                {
                  "price": {
                    "amount": 2.99,
                    "currency": "GBP",
                    "display_amount": "£2.99"
                  }
                }
              ]
            }
          }
        ]
      }
    }

(I've truncated the above so it only shows the relevant information.)
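To pull the price out of a result shaped like that, something along these lines should do. It's a sketch that assumes the response has already been turned into a plain dictionary with the keys shown above; the SDK itself returns model objects, so the real access pattern may differ. `first_listing_price` is a name invented for this example.

```python
from decimal import Decimal
from typing import Optional


def first_listing_price(response: dict) -> Optional[Decimal]:
    """Return the first listed price, or None if there are no offers."""
    # Every level can be missing (e.g. no results for the ISBN), so fall
    # back to empty containers rather than raising.
    items = (response.get("search_result") or {}).get("items") or []
    for item in items:
        listings = (item.get("offers") or {}).get("listings") or []
        for listing in listings:
            amount = (listing.get("price") or {}).get("amount")
            if amount is not None:
                # Go via str() so the float 2.99 becomes exactly Decimal("2.99").
                return Decimal(str(amount))
    return None


sample = {
    "search_result": {
        "items": [
            {
                "asin": "B09JLQHHXN",
                "offers": {"listings": [{"price": {"amount": 2.99, "currency": "GBP"}}]},
            }
        ]
    }
}

print(first_listing_price(sample))  # 2.99
```

Returning `None` rather than raising makes it easy to skip books that Amazon doesn't sell.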

Kobo ISBN & Price

Let's get the ISBN and Price of a book on Kobo. There's no easy API to do this. But, thankfully, Kobo embeds some Schema.org metadata.

Look at the source code for https://www.kobo.com/gb/en/ebook/venomous-lumpsucker-1

HTML

<div id="ratings-widget-details-wrapper" class="kobo-gizmo" data-kobo-gizmo="RatingAndReviewWidget" data-kobo-gizmo-config ='{"googleBook":"{\r\n \"@context\": \"http://schema.org\",\r\n \"@type\": \"Book\",\r\n \"name\": \"Venomous Lumpsucker\",\r\n \"genre\": [\r\n \"Fiction \\u0026 Literature\",\r\n \"Humorous\",\r\n \"Literary\"\r\n ],\r\n \"inLanguage\": \"en\",\r\n \"author\": {\r\n \"@type\": \"Person\",\r\n \"name\": \"Ned Beauman\"\r\n },\r\n \"workExample\": {\r\n \"@type\": \"Book\",\r\n \"author\": {\r\n \"@type\": \"Person\",\r\n \"name\": \"Ned Beauman\"\r\n },\r\n \"isbn\": \"9781473613546\" …'> </div>

Getting the data from the data-kobo-gizmo-config is a little tricky.

- Using Python Requests won't work because Kobo seem to run a JS CAPTCHA to detect scraping.

- There is a Calibre-Web Kobo plugin but it requires you to have a physical Kobo eReader in order to get an API key.

- The Rakuten API is only for the Japanese store.

So we have to use the Selenium WebDriver to scrape the data:

Python 3

    from selenium import webdriver
    from bs4 import BeautifulSoup
    import json

    # Open the web page
    browser = webdriver.Firefox()
    browser.get("https://www.kobo.com/gb/en/ebook/venomous-lumpsucker-1")

    # Get the source
    html_source = browser.page_source

    # Soupify
    soup = BeautifulSoup(html_source, 'html.parser')

    # Get the encoded JSON Schema
    schema = soup.find_all(id="ratings-widget-details-wrapper")[0].get("data-kobo-gizmo-config")

    # Convert to object from JSON
    parsed_data = json.loads(schema)

    # Decode the nested JSON strings
    parsed_data["googleBook"] = json.loads(parsed_data["googleBook"])

    # Get ISBN and Price
    price = parsed_data["googleBook"]["workExample"]["potentialAction"]["expectsAcceptanceOf"]["price"]
    isbn = parsed_data["googleBook"]["workExample"]["isbn"]

    print(isbn)
    print(price)
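The parsing half of that can be split from the browser, so it can be tried on saved page source without launching Firefox. Here's a rough sketch using only the standard library in place of BeautifulSoup. `GizmoConfigParser` and `extract_isbn_and_price` are names invented for this example, and the sample page is made up to mirror the structure above; the real price may be a string rather than a number.

```python
import html
import json
from html.parser import HTMLParser


class GizmoConfigParser(HTMLParser):
    """Collects data-kobo-gizmo-config from the element with a given id."""

    def __init__(self, element_id):
        super().__init__()
        self.element_id = element_id
        self.config = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if self.config is None and attributes.get("id") == self.element_id:
            # HTMLParser has already unescaped entities in the attribute value.
            self.config = attributes.get("data-kobo-gizmo-config")


def extract_isbn_and_price(page_source):
    parser = GizmoConfigParser("ratings-widget-details-wrapper")
    parser.feed(page_source)
    if parser.config is None:
        raise ValueError("ratings widget config not found in page source")
    outer = json.loads(parser.config)
    book = json.loads(outer["googleBook"])  # JSON nested inside a JSON string
    work = book["workExample"]
    return work["isbn"], work["potentialAction"]["expectsAcceptanceOf"]["price"]


# Made-up page with the same nesting as the real widget.
book = {
    "@type": "Book",
    "workExample": {
        "isbn": "9781473613546",
        "potentialAction": {"expectsAcceptanceOf": {"price": 2.99}},
    },
}
config = json.dumps({"googleBook": json.dumps(book)})
sample_page = '<div id="ratings-widget-details-wrapper" data-kobo-gizmo-config="{}"></div>'.format(
    html.escape(config, quote=True)
)

print(extract_isbn_and_price(sample_page))
```

With Selenium, you'd pass `browser.page_source` straight to `extract_isbn_and_price`.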

Kobo Wishlist

OK, nearly there! Given a Kobo book URL we can get the price and ISBN, then use that ISBN to get the Kindle price. But how do we get the Kobo book URL in the first place?

I'm adding all the books I want to my Kobo Wishlist.

Inside the Wishlist is a scrap of JavaScript which contains this JSON:

JSON

    {
      "value": {
        "Items": [
          {
            "Title": "Venomous Lumpsucker",
            "Price": "£2.99",
            "ProductUrl": "/gb/en/ebook/venomous-lumpsucker-1"
          }
        ],
        "TotalItemCount": 11,
        "ItemCountByProductType": {
          "book": 11
        },
        "PageIndex": 1,
        "TotalNumPages": 1
      }
    }

(Simplified to make it easier to understand.)

Although there's a price, there's no ISBN, so you'll need to use the "ProductUrl" to get the ISBN and price as above.
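Once you have that JSON, turning it into a list of full URLs is simple. A minimal sketch, assuming the structure shown above, the `https://www.kobo.com` base, and prices that always carry a leading "£". `wishlist_entries` is a name invented for this example.

```python
from decimal import Decimal
from urllib.parse import urljoin

KOBO_BASE = "https://www.kobo.com"  # assumption: ProductUrl is relative to this


def wishlist_entries(wishlist: dict) -> list:
    """Flatten the wishlist JSON into title / absolute URL / price records."""
    entries = []
    for item in wishlist["value"]["Items"]:
        entries.append(
            {
                "title": item["Title"],
                "url": urljoin(KOBO_BASE, item["ProductUrl"]),
                # Assumption: prices look like "£2.99"; other stores will differ.
                "price": Decimal(item["Price"].lstrip("£")),
            }
        )
    return entries


wishlist = {
    "value": {
        "Items": [
            {
                "Title": "Venomous Lumpsucker",
                "Price": "£2.99",
                "ProductUrl": "/gb/en/ebook/venomous-lumpsucker-1",
            }
        ],
        "PageIndex": 1,
        "TotalNumPages": 1,
    }
}

for entry in wishlist_entries(wishlist):
    print(entry["title"], entry["url"], entry["price"])
```

If "TotalNumPages" is ever more than 1, the remaining pages would presumably need fetching too.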

Sadly, unlike Amazon, there's no way to publicly share a wishlist. Getting the JSON requires logging in, so it's back to Selenium again!

This should be enough:

Python 3

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys
    from bs4 import BeautifulSoup
    import time

    browser = webdriver.Firefox()
    browser.get("https://www.kobo.com/gb/en/account/wishlist")

    # Log in
    username_box = browser.find_element(By.NAME, "LogInModel.UserName")
    username_box.clear()
    username_box.send_keys('you@example.com')

    password_box = browser.find_element(By.NAME, "LogInModel.Password")
    password_box.clear()
    password_box.send_keys('p455w0rd')
    password_box.send_keys(Keys.RETURN)

    time.sleep(5)  # Wait for load and rendering

But Kobo presents a CAPTCHA, which prevents login.

There is an unofficial API which, sadly, doesn't seem to work at the moment.

Next Steps

For now, I'm saving specific Kobo book URLs into a file and then running a scrape once per day. Hopefully, the unofficial Kobo API will be working again soon.
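The daily run could be glued together roughly like this. It's a sketch, not the script I actually run: the two lookup functions are passed in so the Selenium and PAAPI code can be swapped for stubs, the function names are invented, and the stub prices are placeholders.

```python
from decimal import Decimal
from typing import Callable, Iterable, List, Optional, Tuple


def read_urls(lines: Iterable[str]) -> List[str]:
    """One URL per line; blank lines and '#' comments are ignored."""
    urls = []
    for line in lines:
        line = line.strip()
        if line and not line.startswith("#"):
            urls.append(line)
    return urls


def price_match_candidates(
    urls: Iterable[str],
    kobo_lookup: Callable[[str], Tuple[str, Decimal]],
    amazon_lookup: Callable[[str], Optional[Decimal]],
) -> List[Tuple[str, Decimal, Decimal]]:
    """Return (url, kobo_price, amazon_price) wherever Amazon is cheaper."""
    candidates = []
    for url in urls:
        isbn, kobo_price = kobo_lookup(url)
        amazon_price = amazon_lookup(isbn)
        if amazon_price is not None and amazon_price < kobo_price:
            candidates.append((url, kobo_price, amazon_price))
    return candidates


# Stubs standing in for the real scrapers; the prices are placeholders.
def fake_kobo_lookup(url):
    return ("9781473613546", Decimal("4.99"))


def fake_amazon_lookup(isbn):
    return Decimal("4.31")


urls = read_urls(
    ["# books to watch\n", "https://www.kobo.com/gb/en/ebook/venomous-lumpsucker-1\n", "\n"]
)
for url, kobo_price, amazon_price in price_match_candidates(urls, fake_kobo_lookup, fake_amazon_lookup):
    print(f"{url}: Kobo {kobo_price}, Amazon {amazon_price}")
```

In real use, `read_urls` would be given an open file, and the run would be scheduled with cron or similar.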

8 thoughts on “Automatic Kobo and Kindle eBook Arbitrage”

I wonder if this API is what https://booko.com.au uses... Or if they perhaps provide an API that can be used in lieu of having to meet Amazon's criteria?

Tim Wisniewski

Nice! I’ve been thinking about switching to Kobo all week because of the upcoming lock-in. Have you managed to export all your books already? Apparently you have to do it one by one. I was thinking of building a similar script to automate that, using Playwright or something.

@edent

There are many scripts available. I used https://github.com/bellisk/BulkKindleUSBDownloader

@Edent nice find with the embedded JSON for the Kobo Wishlist! i've been trying to scrape the wishlist in order to fetch the books into my "To Read" list on my static site.

were you able to get around the captcha Kobo uses? when using a headless browser (like Puppeteer), i just got the "are you a human?" prompt when trying to sign in to my Kobo account.

@brookie nope, couldn't get through it.

I'm hoping this issue can be fixed though - https://github.com/subdavis/kobo-book-downloader/issues/121

Zbigniew Ziobro

Easy-to-remove DRM is still DRM, and they might strengthen it later. Don't buy from Kobo either.

@edent

I fundamentally disagree. Once I've stripped the DRM, it doesn't exist. I can back up my books, transfer them to any reader, and do what I want with them. If they later strengthen the DRM, it won't matter because I've already removed it.

I want to extricate my reading habit from Amazon.
