If you hang around with computerists long enough, they start talking about the Semantic Web. If you can represent human knowledge in a way that's easy for computers to understand, it will be transformative for information processing.

But computers, traditionally, haven't been very good at parsing ambiguous human text.

Suppose you saw this text written for a human:

Our opening hours are: Weekdays 10 until 7. Weekend 10 until 10 (Early closing 9 o'clock Sunday).

Not the most straightforward sentence, but pretty easy for a human to parse.

Until recently, the best way to represent that for a computer was something like:

HTML

<meta itemprop="openingHours" content="Mo-Fr 10:00-19:00">
<meta itemprop="openingHours" content="Sa 10:00-22:00">
<meta itemprop="openingHours" content="Su 10:00-21:00">

or

HTML

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "openingHours": ["Mo-Fr 10:00-19:00", "Sa 10:00-22:00", "Su 10:00-21:00"]
}
</script>

A tightly constrained vocabulary which can be precisely parsed by a simple state-machine. Easy to ingest, interpret, and query. Easy for machines, that is. As much as I love the semantic web, it is hard for humans to write, update, and maintain.
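To make the "simple state-machine" point concrete, here is a minimal Python sketch of how little code it takes to parse and query those values. It is only an illustration: the function names `parse_opening_hours` and `is_open` are made up for this example, and it assumes each value is a single day or day range followed by one time span, which is all the examples above use.

```python
import re
from datetime import time

# Two-letter day codes as used in the openingHours values above.
DAYS = ["Mo", "Tu", "We", "Th", "Fr", "Sa", "Su"]

# One day ("Sa") or a day range ("Mo-Fr"), then "HH:MM-HH:MM".
# Assumption: comma-separated day lists and multiple spans are out of scope.
PATTERN = re.compile(
    r"^(?P<first>[A-Z][a-z])(?:-(?P<last>[A-Z][a-z]))?\s+"
    r"(?P<opens>\d{2}:\d{2})-(?P<closes>\d{2}:\d{2})$"
)


def parse_opening_hours(spec):
    """Return {day: (opens, closes)} for a value like 'Mo-Fr 10:00-19:00'."""
    match = PATTERN.match(spec.strip())
    if match is None:
        raise ValueError(f"not a recognised openingHours value: {spec!r}")
    first = match["first"]
    last = match["last"] or first
    if first not in DAYS or last not in DAYS:
        raise ValueError(f"unknown day code in {spec!r}")
    start, end = DAYS.index(first), DAYS.index(last)
    if start > end:
        # Ranges that wrap past Sunday are not handled in this sketch.
        raise ValueError(f"day range runs backwards in {spec!r}")
    opens = time.fromisoformat(match["opens"])
    closes = time.fromisoformat(match["closes"])
    return {day: (opens, closes) for day in DAYS[start:end + 1]}


def is_open(specs, day, at):
    """True if `at` falls within the hours listed for `day`."""
    hours = {}
    for spec in specs:
        hours.update(parse_opening_hours(spec))
    if day not in hours:
        return False
    opens, closes = hours[day]
    return opens <= at < closes
```

Given the three values from the markup above, `is_open(specs, "Su", time(21, 30))` is false and `is_open(specs, "Sa", time(21, 30))` is true, and the answer is the same every time it runs.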

But we have AI now. So do we need to mark up documents specifically for machines?

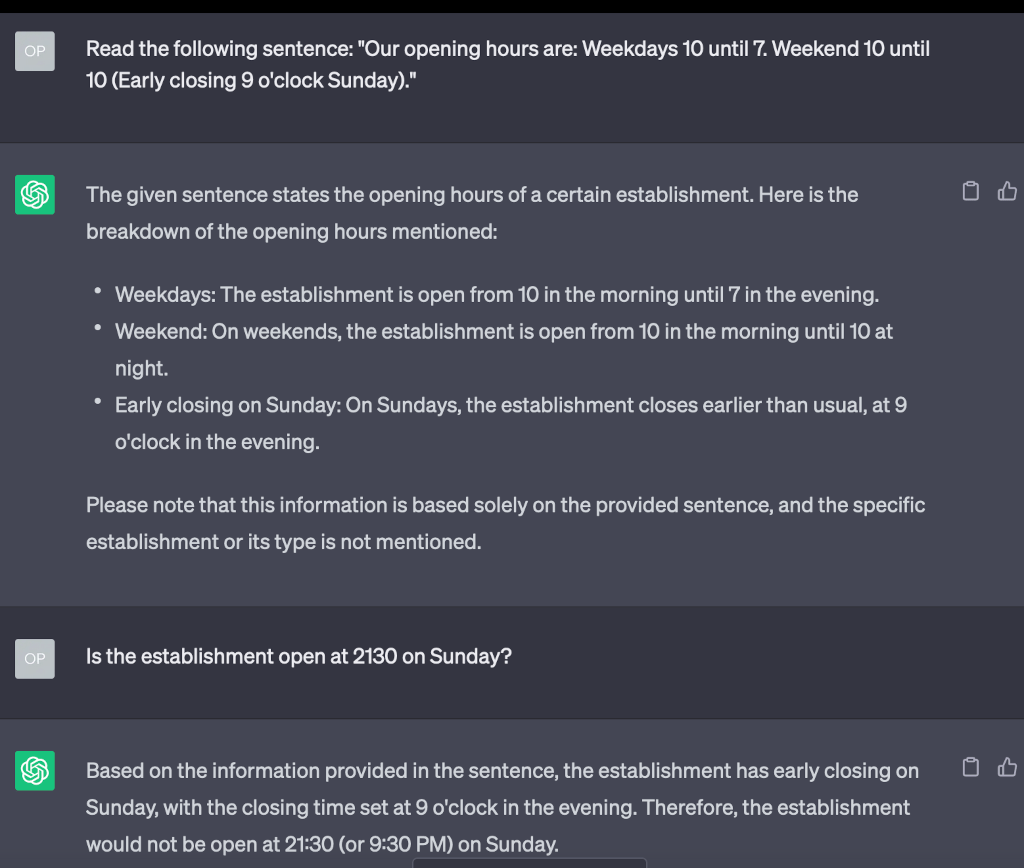

I fed the text into OpenAI's ChatGPT. Here's what it said:

It isn't just capable of parroting back data - it can perform moderately complex reasoning:

Do we need to write for computers any more? One of the demands of the Semantic Web was that we should use HTML elements like <address> to clearly mark up a contact address and we should wrap dates and times in the <time> element.

Is that now redundant?

We still need to write clearly and unambiguously. But do we need separate "machine-readable" HTML if machines can now read and interpret text designed for humans?

4 thoughts on “Does AI mean we don't need the Semantic Web?”

@Edent It's a fun idea. I'm busy thinking about how you unit-test this stuff.

Because it's not really that "AI can do this." It's that AI can do X thing at high probability, but is going to give a wrong or unparseable answer some percentage of the time. So AI can accept a much wider range of input, with the need for fallbacks some of the time.

That's a serious difference in how you architect your apps.
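One way to picture that architecture, as a rough Python sketch rather than anyone's actual implementation: treat the model as an untrusted callable, check its answer against a strict pattern, retry a couple of times, and hand back `None` so the caller knows to fall back. Everything here is hypothetical, including the names `extract_hours` and `is_valid`, the `model` parameter, and the assumption that the model is asked to reply with one openingHours value per line.

```python
import re
from typing import Callable, List, Optional

# Shape check only: day or day range, then HH:MM-HH:MM.
# It does not verify that the times are real (e.g. it would accept 99:99).
STRICT = re.compile(
    r"^(Mo|Tu|We|Th|Fr|Sa|Su)(-(Mo|Tu|We|Th|Fr|Sa|Su))? "
    r"\d{2}:\d{2}-\d{2}:\d{2}$"
)


def is_valid(value: str) -> bool:
    """True if the value has the strict openingHours shape."""
    return STRICT.match(value) is not None


def extract_hours(
    text: str,
    model: Callable[[str], str],  # hypothetical: free text in, answer text out
    retries: int = 2,
) -> Optional[List[str]]:
    """Ask the model for openingHours values; accept only well-formed answers."""
    for _ in range(retries + 1):
        answer = model(text)
        values = [line.strip() for line in answer.splitlines() if line.strip()]
        if values and all(is_valid(v) for v in values):
            return values
    # Every attempt was unparseable: the caller needs a fallback path,
    # such as showing the original text or asking a human.
    return None
```

Because the model is just a parameter, the deterministic part can be unit-tested with stub functions that return good, bad, or flaky answers. What that cannot test is whether a well-formed answer is also the right one.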

K

No, absolutely not. Standardized syntax and semantics are extremely useful.

For starters, you've picked what is possibly the simplest use case. There are a million common cases that today's "AI" can't handle -- or worse, claims to handle but gets horribly wrong. It's not as simple as "we have AI now". With an HTML tag, I can be sure it's correct. How can you be sure your English sentence will be parsed correctly by whatever "AI" a user might have? We tried letting 2 or 3 different browser engines handle details their own way, and it was a nightmare for everyone. Giving up on standardization means we'll be back to the bad old days of "Best viewed with ___" badges, only now without even determinism.

Second, it's true that "it is hard for humans to write, update, and maintain" raw HTML, but when was the last time any non-programmer had to do that? We have tools to handle this for us. I can't whistle a phone number, either, yet I manage to make phone calls. It's far, far easier to make a decent UI than it is to write, update, and test a set of text-processing AIs for all these possible use cases.

Third, you've chosen a specific layer of the stack to give up and trust your content to "AI". Why here? One could as easily ask "Does AI mean we don't need HTML?" Just write some English text that says what to draw on the screen, and let the user-agent handle it. Does AI mean we don't even need TCP?

There was a period when we thought Postel's Law was a good idea, but it turns out that the way to climb the abstraction ladder is to be stricter with our specifications, not looser. (Imagine that USB claimed to solve the cable compatibility problem by declaring that USB 5 has no fixed protocol, and the "AI" on each end would figure out the best way to communicate. Nobody would trust that to work!) I don't want everybody's web browser to suddenly forget how to read part of my webpage because they downloaded the latest LLM update for their browser and find that Gen-Alpha is talking differently than me.
