Image files are a grid of pixels, and each pixel contains colour information. But they don't have to contain only colour information. Here are some thoughts on other things that a future image format might contain.

What exists already?

A typical bitmap image looks like this under the hood:

|   | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | Black | Red | Red | Blue |
| 1 | Red | White | Blue | Yellow |
| 2 | Orange | Purple | Green | Brown |

...

That is a grid of pixels, each with a colour value.

Modern image formats can also contain pixel-level detail about transparency. When an image is displayed above a different image, the computer calculates how much of the lower image's colour is mixed with the upper image's colour.
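That mixing can be sketched in a few lines. This is a minimal illustration of simple alpha blending, assuming 8-bit RGB tuples and an opacity between 0.0 and 1.0; the function name `blend_over` is made up for this example, and real compositors also handle things like gamma and premultiplied alpha.

```python
def blend_over(upper, lower, alpha):
    """Mix an upper RGB pixel over a lower one.

    alpha is the opacity of the upper pixel: 1.0 hides the lower pixel
    completely, 0.0 shows only the lower pixel.
    """
    return tuple(
        round(alpha * u + (1 - alpha) * l)
        for u, l in zip(upper, lower)
    )


red = (255, 0, 0)
blue = (0, 0, 255)

# A red pixel that is 50% transparent, displayed above a blue pixel.
half_red_over_blue = blend_over(red, blue, 0.5)
print(half_red_over_blue)
```

The result is a purple somewhere between the two, which is why a half-transparent red pixel looks different depending on what sits underneath it.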

Some image formats also allow pixel-level detail about brightness. A device might be able to set the brightness of individual parts of the image.

So you end up with an image which looks like:

|   | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | Black | Red - but 50% transparent | Red | Blue |
| 1 | Red - but really dim | White - as bright as possible | Blue | Yellow |

...

Thermal / Infrared

Most image formats only deal with visible light. But there are plenty of cameras which can capture infrared. Would it be useful to capture the heat of the scene being photographed?

A future image format could contain details about visible and invisible light.

Invisible Wavelengths

Objects in the real world don't just give off thermal signatures - they often have information in the ultraviolet wavelengths as well.

For example, flowers often have "landing guides" which are only visible to bees and other insects.

Being able to capture beyond visible light may be a useful property for an image format.

Depth

Modern cameras often fire out an invisible infrared grid to allow for quick focussing and to aid with depth effects like bokeh. An image could store the depth map of its subject.

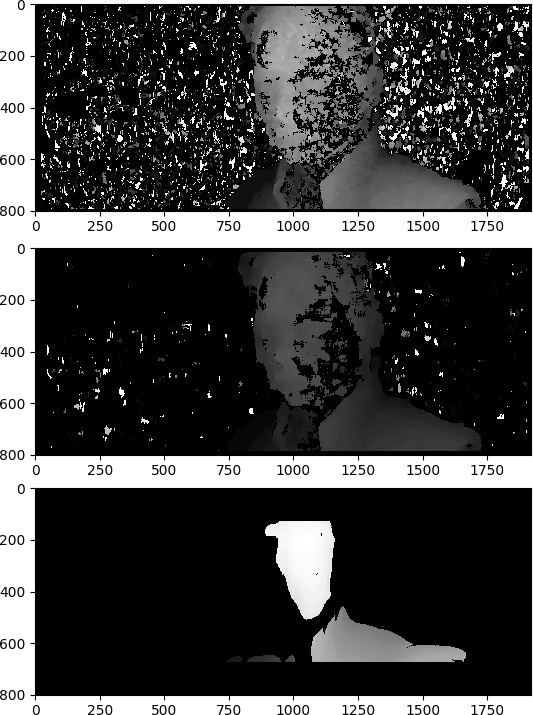

Here's a series of depth maps I made from analysing 3D movies:

This could allow for better 3D displays, or for easier image editing.
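As a rough sketch of the "easier image editing" idea: if every pixel carried a depth value, picking out the subject could be a simple threshold. The grids, the depth values in metres, and the `foreground` helper below are all invented for illustration, not taken from any real format.

```python
# Hypothetical 3x4 image: one colour grid plus a matching depth grid
# (distance from the camera in metres, values made up for this example).
colours = [
    ["Black", "Red", "Red", "Blue"],
    ["Red", "White", "Blue", "Yellow"],
    ["Orange", "Purple", "Green", "Brown"],
]
depth_m = [
    [5.0, 5.0, 1.2, 1.1],
    [5.0, 1.3, 1.0, 1.2],
    [4.8, 4.9, 1.1, 5.0],
]


def foreground(colours, depth, max_depth):
    """Keep pixels nearer than max_depth; replace everything else with None."""
    return [
        [c if d < max_depth else None for c, d in zip(colour_row, depth_row)]
        for colour_row, depth_row in zip(colours, depth)
    ]


# Everything further than 2 metres away is treated as background.
subject = foreground(colours, depth_m, max_depth=2.0)
for row in subject:
    print(row)
```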

Angle

Images are typically 2D. They present pixels on a flat rectangular plane. But they aren't limited to that. Image panoramas and "spherical" images allow for display on a non-flat surface.

Here's an equirectangular 2D image which is rendered as a sphere:

An image could contain pixel-level data showing the angle away from the viewer. That would allow for a more accurate rendering in VR or other non-flat displays.
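In an equirectangular image the angle is implied by where the pixel sits, rather than being stored. Here is a sketch of that mapping, assuming the image covers the full 360° by 180° and using one arbitrary axis convention (y is up, z points at the centre of the image). The function name is hypothetical; a future format could store the resulting angles explicitly for each pixel instead.

```python
import math


def pixel_to_direction(x, y, width, height):
    """Map a pixel in an equirectangular image to a unit direction vector.

    Assumes the image spans 360 degrees horizontally and 180 degrees
    vertically, and samples the centre of each pixel.
    """
    # Longitude runs from -pi (left edge) to +pi (right edge).
    lon = ((x + 0.5) / width) * 2 * math.pi - math.pi
    # Latitude runs from +pi/2 (top edge) to -pi/2 (bottom edge).
    lat = math.pi / 2 - ((y + 0.5) / height) * math.pi
    return (
        math.cos(lat) * math.sin(lon),  # right
        math.sin(lat),                  # up
        math.cos(lat) * math.cos(lon),  # forward
    )


# The top-left pixel of a 4096x2048 panorama points up and backwards.
print(pixel_to_direction(0, 0, 4096, 2048))
```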

Object Detection

Now we start to get funky! AI is getting pretty good at detecting specific objects in photographs. What happens if we start marking them up within the image itself?

Either something as basic as this:

Or as detailed as this:

That would make it possible to easily remove or replace objects from images.

Combined with depth-sensing, it could be a powerful way to edit images.
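To make the removal idea concrete, here is a minimal sketch assuming the image carries a label grid alongside its colours. The label numbers, their meanings, and the `remove_object` helper are invented for this example.

```python
# Hypothetical label layer: 0 = background, 1 = jumper, 2 = ball.
colours = [
    ["Black", "Red", "Red", "Blue"],
    ["Red", "White", "Blue", "Yellow"],
    ["Orange", "Purple", "Green", "Brown"],
]
labels = [
    [0, 1, 1, 0],
    [0, 1, 1, 2],
    [0, 0, 0, 2],
]


def remove_object(colours, labels, object_id, fill=None):
    """Return a copy of the image with every pixel of one object replaced by fill."""
    return [
        [fill if label == object_id else c for c, label in zip(colour_row, label_row)]
        for colour_row, label_row in zip(colours, labels)
    ]


# Cut the ball out, leaving holes (None) that an editor could fill in later.
without_ball = remove_object(colours, labels, object_id=2)
for row in without_ball:
    print(row)
```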

Extra Texture

Colour replication depends heavily on the material which is imbued with that colour. That's what Pantone is all about. Suppose an object has a sky-blue colour - is it useful to know that it is a heavy woollen jumper rather than a thin nylon t-shirt?

Wheels within wheels and layers within layers

It's probably sensible to implement something like this as multiple layers, rather than saying every pixel must be RGBA+Depth+Heat+Angle+Object+etc+etc.

Different layers could have different resolutions (infrared is typically lower resolution than the image it is overlaid on). Different layers might be compressed more efficiently by different algorithms.
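One way to picture mixed-resolution layers is as separate grids that each know their own size, with a lookup that maps a full-resolution pixel onto the coarser grid. The `Layer` class and its `sample` method below are hypothetical, and the nearest-neighbour lookup is only the simplest possible choice.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    width: int
    height: int
    data: list  # list of rows, each row a list of values

    def sample(self, x, y, full_width, full_height):
        """Nearest-neighbour lookup at a full-resolution pixel coordinate."""
        lx = x * self.width // full_width
        ly = y * self.height // full_height
        return self.data[ly][lx]


# A 2x2 thermal layer (degrees Celsius, made-up values) for a 4x4 colour image.
thermal = Layer("thermal", 2, 2, [[20.5, 36.6], [21.0, 22.0]])

# Temperature under the top-right pixel of the 4x4 image.
print(thermal.sample(3, 0, full_width=4, full_height=4))
```

Each layer could then be stored and compressed on its own, and a viewer that doesn't understand a layer could simply skip it.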

We're used to layered images in formats like PSD and XCF - so why not in a future version of JPEG or AVIF?

What else?

What other things might be useful for an image to store at an individual pixel level? Drop a note in the comments.

6 thoughts on “Other pixel-level meta data you could put in an image format”

@Edent the only software patent (yes, I know) I’ve ever been involved in was to do with adding depth and material data per pixel, for the ill-fated image editor “Piranesi” https://en.m.wikipedia.org/wiki/Piranesi_(software) It reconstructed the 3D scene from the depth map and used that to scale/orient brushes to the surface you were painting on. Fantastic for one-off images, but really hard to edit as there was only one depth-map so changes were destructive.


@Edent Have you heard of the game Terratech? In it people build vehicles and factories. They can share their creations by exporting them as PNG images. The game embeds instructions for how the object is built from constituent parts inside the image. As long as the image is not rewritten it can be viewed and copied about by anything. Very clever! https://www.gamedeveloper.com/business/how-terratech-uses-steganography-for-sharing-player-generated-content


@Edent The TIFF file format has support for multi-spectral data, which I believe is used in the geo world for embedding layers in a single image


Ivan

Hyperspectral images! Instead of puny RGB colours for us poor (mostly) trichromatic Homo Sapiens, sample a spectrum of intensity as a function of wavelength at many wavelengths at every (x,y) point of the image. Current implementations range from file formats (FITS, HDF, NetCDF) to levels above the file format (NumPy arrays with certain named fields, MATLAB objects of certain class). The resulting data cubes are frequently larger in the wavelength dimension than they are in the (x,y) dimensions.

Robin

@toby_jaffey is right. In a single image you could have RGB + near infrared + landcover codes (eg water=0, forest=3, etc.).

NASA and ESA publish their satellite imagery with a ton of metadata like cloud mask, brightness, fire mask, pixel-level error codes, etc. It’s incredibly rich!

And that doesn’t even consider RADAR imagery, which is a whole other thing.

Iain W

Much of this metadata is common in the ML dataset space, or indeed modern phone cameras.

Take a portrait mode photo on an iPhone - you can extract the depth info from it, or the video out of a Live Photo. They have semantic labels attached from ML processes to enable content search (though I’ve not checked if they’re in a separate database or in the EXIF).

More comments on Mastodon.