I'm doing an apprenticeship MSc in Digital Technology. In the spirit of openness, I'm blogging my research and my assignments.

This is my paper from the PP1 module - where I take some CPD related to my profession. I picked Machine Learning in Python.

I've blogged about the course itself. The middle two parts of this paper are about that - why I chose it and how I put it into practice. The first and fourth parts are, as far as I can tell, unrelated. We have to write about reflection in the workplace. I am not very introspective, and I don't really enjoy it. So it was somewhat tedious to churn out. The final part was about ethics which at least had the advantage of being interesting.

Nevertheless, I was happy with a mark of 76%. (In the English system 50% is a pass, 60% is a commendation, 70% is distinction.)

I used my algorithm to write an assignment to complete this.

A few disclaimers:

- I don't claim it to be brilliant. I am not very good at academic-style writing.

- It is fairly inaccurate. Many of the concepts on reflection were not relevant to my workplace, so there is a lot of fudging.

- This isn't how I'd write a normal document for work - and the facts have not been independently verified.

- This isn't the policy of my employer, nor does it represent their opinions. It has only been assessed from an academic point of view.

- It has not been peer reviewed, nor are the data guaranteed to be an accurate reflection of reality. Cite at your own peril.

- I've quickly converted this from Google Docs + Zotero into MarkDown. Who knows what weird formatting that'll introduce!

- All references are clickable - going straight to the source. Reference list is at the end, most links converted using DOI2HT.ML.

And, once more, this is not official policy. It was not commissioned by anyone. It is an academic exercise. Adjust your expectations accordingly.

Abstract

In this paper, the author reviews several models of reflection and determines which is most suitable for their current industry and workflow.

The author then describes the selection process of a Continual Professional Development course. This is done by examining their Personal Development Plan, and in conjunction with the needs of their employer.

After taking a short course on statistics and algorithms, the author discusses their application to a specific cybersecurity issue within Government (the specific details are deliberately kept confidential due to national security concerns - as agreed by lecturers). They investigate how this sort of analysis can be integrated into a modern data process. They also discuss other methods of analysing data and how they compare to the recently acquired knowledge. This section concludes with a reflection on the application of this newly acquired knowledge.

Finally, the author looks at how a culture of reflection can develop within their organisation, and how that integrates with the needs and responsibilities of a modern data analytics professional.

1. A critical review of three reflective models

The author has rarely experienced structured reflection in the workplace. Although the UK Civil Service engages in annual appraisals (Fletcher, 2008), they are often performative rather than substantive. Additionally, there is little evidence that reflection makes a difference in a digital organisation (Dors et al., 2020). This lack of evidence makes it challenging to compare the utility of different reflective models.

The author's review of the assigned reading material suggests that the three most common models across a variety of industries are "DIEP" (Rogers, 2001), "What? So what? Now what?" (Driscoll, 2007), and Moon's levels of learning (Moon, 2013). These are evaluated by the author in Table 1.

| Table 1 - Evaluation of three reflective models | |||

| DIEP | What? So what? Now what? | Moon's levels of learning | |

| Strengths | Structured model which is well used in industry.

Can be used for individuals or teams. |

Brief, and easy to complete

Option to drill down into each question for further reflection. |

Relies on a "common sense" understanding between users.

Well understood for reflections on learning. |

| Weaknesses | Model is rigid and may not apply in every situation.

Takes up a lot of time. |

Primarily designed for the nursing profession.

Limited use / acceptance in digital workplaces. |

Several steps - so may be burdensome to complete.

Overly focused on emotions, to the exclusion of more practical matters. |

1.1 Sources

Reflection generally focuses on the writer's "experiences, thoughts, feelings and assumptions" (Bassot, 2016 (p. 16)). The author recognises there is an urgent need for better reflective models which take into account the different ways that individuals relate to reflection (Roberson et al., 2021). Structured reflective practice also needs to take into account that different people may have different learning styles (Pashler et al., 2008).

1.2 Comparison of practices

The author's organisation relies heavily on "Capability Frameworks" in order to judge the competencies of staff (Uk Government, 2020). The Digital, Data and Technology framework provides a model whereby staff can self-assess against a series of skills and behaviours. The ability for staff and their managers to be able to reflect on performance in a structured way is necessary for the continued professionalisation of technologists working in the Civil Service (Thornton, 2018). However, "soft skills" are considered essential to the modern workplace (Lepeley et al., 2021). In the author's opinion, a purely technical approach to reflection will therefore be limited.

Theoretical frameworks have not been well received in the author's organisation. Instead, there is a strong focus on the “technical‐rational approach” (Chivers, 2003). For organisations which are focused on delivering technical outcomes, the author believes that most reflective models are too focused on an individual's feelings. While workplaces have to take pastoral care of their staff, there is a trend in modern workplaces to become "neo-paternalistic" (Leclercq-Vandelannoitte, 2021) and attempt to cater to the development needs of their staff. Given the range of personality types in the typical workspace (Russo & Stol, 2020) it is not practical for an employer to impose a "one size fits all" reflective model for their employees to follow.

1.3 Conclusion

In the author's opinion, DIEP provides a reasonable model for general reflection without an over-reliance on emotional introspection. This is more suitable for people who are neurodiverse and struggle with articulating and assessing their emotions.

2. A discussion of the selection of CPD in relation to the wider technical area

2.1 Description

I chose a course designed to enhance the skills of people with basic data science skills - the full details of which are in Appendix C. It was based on the Python programming language developed by Guido van Rossum (Pajankar, 2022). It covered the basics of Extract-Transform-Load (ETL) (Theodorou et al., 2017) - whereby data are read, corrected, and manipulated. It also taught relevant Machine Learning (ML) packages such as numpy, pandas, MatPlotLib and SciKitLearn. This was particularly important for me because these Python packages are used extensively in the UK Government (GitHub, 2022). Outside of Government, Python is one of the most widely used programming languages for ML (Stancin & Jovic, 2019).

2.2 Relevance

Data science has proved invaluable to the worldwide response to the COVID‑19 pandemic - with Python and open source workflows proving popular (Malarvizhi et al., 2021). While analytics has a long history - especially in Government responses to medical data (Anderson, 2011) - ML is relatively new and has only become practical thanks to the rapid increases in computer processing speeds (Fradkov, 2020).

I consider ML to be a suitable way to harness advances in stochastic models to investigate complex problems, in a manner similar to humans but at greater speed and accuracy.

2.3 Context

ML - and data science in general - is a priority for the current Government (Cummings, 2020). The ability to quickly and accurately model outcomes using data is a key pillar of the UK's National Data Strategy (Dowden, 2020). Departments across the organisation are already publishing guides on the use of ML (DSTL, 2020). As discussed above, ML is used widely outside of Government, and we wish to bring our organisation closer to industry norms.

2.4 Author's Role

My Personal Development Plan (see Appendix B) requires me to look for interesting opportunities. I felt that this CPD would help me expand my repertoire of skills and make me a more attractive candidate for future roles. It would build on my existing skills with the R statistical language and would enhance my ability to communicate effectively using data. I have recently started a new job which has a focus on protecting and maintaining part of the UK's Critical National Infrastructure (Weiss & Biermann, 2021) - specifically, its domain names. I needed a course which would enable me to quickly and comprehensively analyse large quantities of data and to make my analyses open, reproducible, and engaging.

2.5 Possible Alternatives

I also considered whether there were more suitable courses available. One option was a course in Cloud Operations. Governments across the world are investing heavily in moving computing resources to the Cloud (Busch et al., 2014). In collaboration with my manager, we concluded that our Cloud team was already fully resourced, so that course was rejected.

2.6 Evaluation

As my career progresses, I expect to have to engage more with the analysis and presentation of complex data sets. Given the strong emphasis on using ML within the UK Government, and Python's established use in my department, this is a suitable course for me at this stage of my career. I hope that the skills I gain will enable me to publish open source code and enhance the reputation of my department for publishing cutting-edge analytics.

3. An overview of how the CPD has been applied

3.1 An overview of the CPD

The course was based on the Python programming language (see Appendix C). The course taught the basics of both Pandas and Numpy - which are popular packages for visualising and analysing data. It briefly touched on modern ML techniques.

3.2 Identification of specific projects for which this CPD has had an impact.

Since the start of the COVID‑19 Pandemic, statistics and data investigation has become hugely important to the public. The power of predictive data and analytics is now at the forefront of the political agenda, with data being at the heart of the UK National Action Plan for Open Government (CDDO, 2022). It is believed that opening and analysing government data could generate huge public value (Zhang et al., 2015).

The specific project involves the Government's commitment to securing critical national infrastructure. My team has been given responsibility for analysing certain patterns of behaviour. With a large historic dataset, the team was asked to analyse the data to understand what insights could be gained. The aim was to make predictions about current data based on historic data.

A fundamental part of understanding the insights to be gained from ML is the "Cross-industry standard process for data mining" (CRISP-DM) (Chapman et al., 2000). This cyclic method is illustrated in Figure 1 below.

The use of Python's ML packages was essential to analysing and visualising the data. With a large historic corpus of events, I was able to train up an experimental model to look for patterns in the data which could be considered suspicious. By isolating some of the training data and keeping it as a validation set, I was able to test whether the model's accuracy was sufficient to enhance our team's work. The model was then tested on "live" data to see whether it could detect anomalies faster than our existing processes.

3.3 Detailed discussion and evidence of technical knowledge acquired in relation to the targeted apprenticeship standard

Having a large number of measurable parameters results in an unmanageable number of permutations of variables - this is known as the "curse of dimensionality" (Bellman, 1957). This still causes challenges for modern analytics. A key task for analysts is to remove excess information, and to understand which variables produce statistical noise rather than signal. Modern ML algorithms combined with increased computer speeds, makes it easier to investigate multi-dimensional data and rapidly see which dimensions have a statistically significant effect on the end result.

The data set neatly fits into the "4 Vs" model of "Big Data" (Kepner et al., 2014). Given the velocity of new data, volume of total data, the variety of data sources, and veracity of the data, there are challenges around how to analyse and investigate issues in a coherent way. Before attempting to model the data, it might be necessary to use techniques like MapReduce to make the processing and extraction of raw data easier (Dean & Ghemawat, 2008).

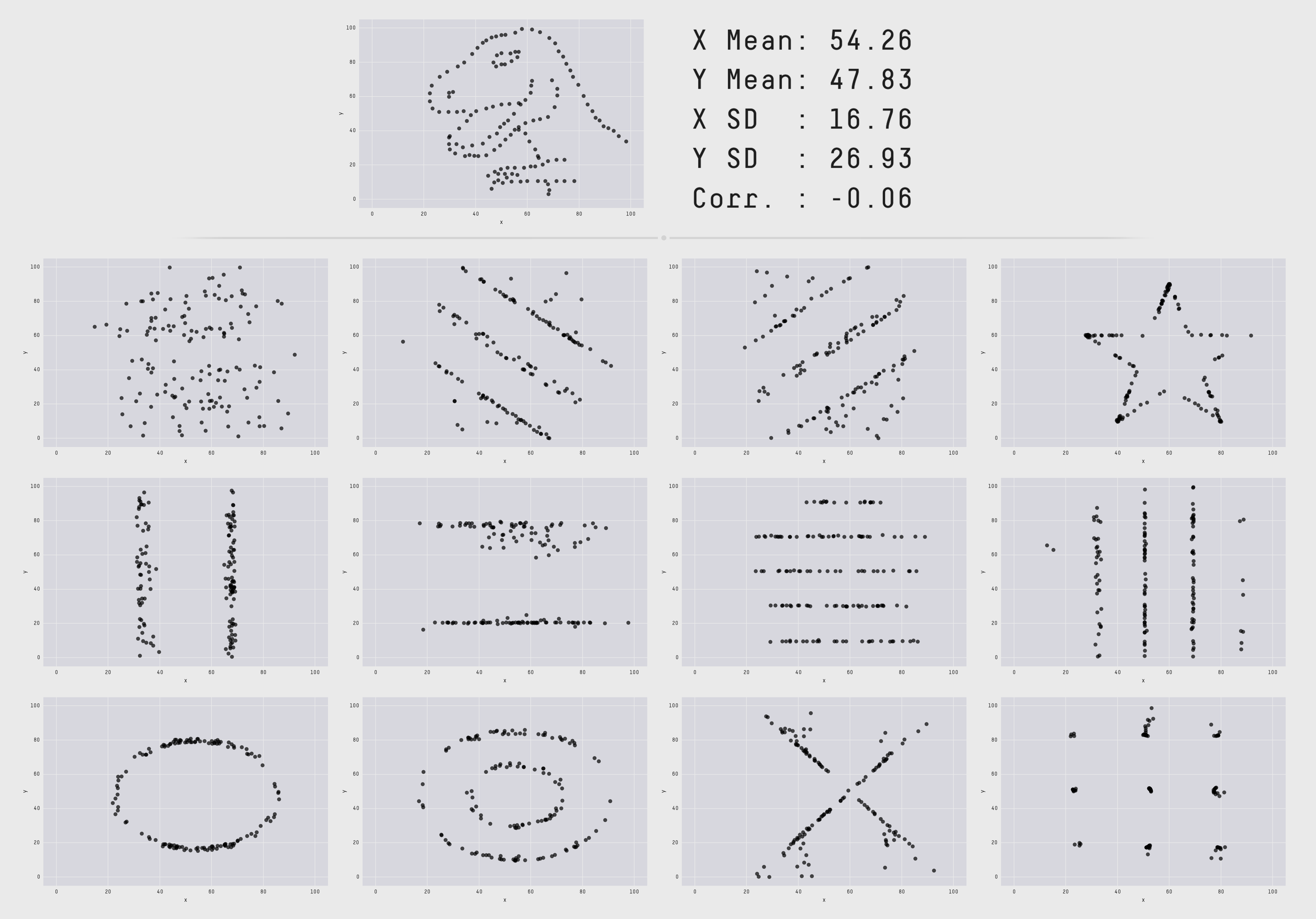

It is insufficient to rely on hypothesis testing as the primary method of investigation. Instead the practitioner should engage in Exploratory Data Analysis (EDA) (Tukey, 1977). EDA allowed me to investigate what sort of information the data were likely to yield, rather than starting from a position that there was a predetermined hypothesis which could be validated. By engaging in early visualisation, I got an immediate sense of the "shape" of the data. Relying purely on statistical observations is often insufficient for understanding what the data represents, as is demonstrated in Figure 2 below.

Figure 02: The "Datasaurus Dozen". A synthetically generated dataset which all have identical means, Pearson coefficients, and standard deviations - and yet all look radically different (Matejka & Fitzmaurice, 2017).

Figure 02: The "Datasaurus Dozen". A synthetically generated dataset which all have identical means, Pearson coefficients, and standard deviations - and yet all look radically different (Matejka & Fitzmaurice, 2017).

There is a crisis of reproducibility in science (Baker, 2016). Even mathematically focussed disciplines like economics have foundational papers which are based on flawed Excel spreadsheets (Herndon et al., 2014). One way this can be countered is by the publication of "reproducible builds" - that is, an information governance pipeline which shows all the steps taken to ingest, manipulate, and analyse the data. Jupyter Notebooks have quickly become one of the most popular tools for publishing information transparently (Perkel, 2018). The CPD gave me a thorough grounding in the basics of Jupyter Notebooks, and I have begun to investigate how they can be used with sensitive Government data, in alignment with the FAIR data principles (Wilkinson et al., 2016).

3.4 CPD application effects

The work on Jupyter notebooks has had a profound effect on how I present data and share it with colleagues. By understanding common models and key algorithms, I was able to create an analytical solution to the specific problem my organisation faced. This has reduced the time and effort spent by the organisation when analysing complex security-related data.

3.5 Prior & current practices

Prior practises were generally not based around a real-time "data-pipeline" (Psaltis, 2017). Data were often relegated to CSV and spreadsheets. Data were occasionally stored on cloud service spreadsheets. This made systematic analysis difficult and led to a lack of data consistency. Without a defined data schema, it was not possible to get data from various sources in the same format - this led to several manual processes for ETL. The result was that data analysis was performed sporadically and there was a lack of homogeneity in presentations to senior stakeholders.

3.6 Alternative approaches

An alternative would have been to open the raw data to allow crowd-sourcing of threat intelligence - the so-called "armchair auditor" model (O’Leary, 2015). While it might be possible that casual observers could find threats in the data, and alert us to them, there are several risks. Opening sensitive log data could inadvertently expose vulnerabilities of which we were not aware, and which may be used against us. A middle-ground might be to publish santised historical data in the open and include Jupyter Notebooks with reproducible results. This would allow other researchers to build ML models based on real data.

3.7 Reflection on the application of the CPD

I will reflect on the application of the CPD using the DIEP model (Rogers, 2001) as discussed in §1.3:

Describe

An advanced course in ML with Python. It covered modern tools and generally accepted statistical techniques. I was able to apply some of these techniques to analyse our data.

Interpret

While my background gives me confidence in statistics, and I was reasonably familiar with R, I wished to gain more modern knowledge of how to apply new tools and techniques. Some of these new tools were useful.

Evaluate effectiveness

I found the use of Jupyter Notebooks to demonstrate the application of knowledge to be an excellent tool. I look forward to bringing that paradigm back into the workplace. Through this experience, I learned new techniques and became aware of how to apply my existing knowledge to a new subset of problems.

Plan for the future

I intend to publish more data analysis as reproducible and interactive notebooks. I will encourage my peers to do the same. I will engage further with the analytics profession in my organisation.

4. How a culture of ethics can be developed in the author's organisation

4.1 Development of ethical culture

The Civil Service relies on ethical behaviour from its employees in order to ensure that there is confidence in the way that the state is operated. Additionally, a culture of ethics is a necessary precondition for both social and economic progress (Murtin et al., 2018). In her 2002 Reith Lecture, the legislator Baroness O'Neil remarked that people's inability to investigate sources of information, and the disparate nature of sources, would lead to a fragmentation of trust (O’Neill, 2010).

It is unethical to have laws which are not open to public scrutiny (Kutz, 2008) - therefore the author considers it similarly unethical to base laws and policies on data which are not publicly available. The author concludes that the open publication of data and analysis is key to developing an ethical culture.

4.2 Practical Concerns

How does the Civil Service develop a culture which is ethical? Mandatory training is common in most organisations, although it is sometimes used to shift the liability for infractions from employer to employee (Allay (UK) Ltd v Gehlen, 2021). Training alone isn't enough to improve employee behaviour when it comes to protecting their own health (Martimo et al., 2008), and there's evidence that compliance training on ethical matters makes no difference to employee's behaviour (Dobbin & Kalev, 2018).

Within the author's organisation, there are informal reflective practices like show and tells. There are also formalised processes which form part of the design process. Finally, experiments with data are subject to ethics board approvals. This mixture of personal training and systemic attitudes to ethical issues should culminate in a culture which values and embodies ethical behaviour.

4.3 Role of professional

In the UK, approximately 72% of roles are unregulated (Tamkin et al., 2013). The information technology industry has resisted the application of ethical practices set by regulatory bodies. The medical profession, for example, has a long history of embracing formal standards - such as the Hippocratic Oath (Hulkower, 2016). Other professions, such as Canadian engineers, hold elaborate "Ritual of the Calling of an Engineer" ceremonies to impress upon graduates the necessity for ethical behaviour (Osman, 1984).

In contrast, information technology practitioners have non-mandatory professional bodies, such as the BCS and ACM. Historically, their codes of ethics (see Appendix D) are rarely enforced (Layton, 1986), and modern institutions are also similarly toothless (Sandy, 2005). The author considers this lack of formal and enforceable ethical conduct to be a deficiency when it comes to developing ethical norms.

In recent years, there has been a growth in the popularity of the "Code of Conduct" (CoC). These are documents which set out acceptable standards of behaviour for those participating in events, or as contributors to open source projects. Despite their overwhelming popularity in recent years (Tourani et al., 2017), there has been some resistance and hostility to CoCs. Some people believe their presence stifles free-speech and creativity (Whittington, 2018). The author believes CoCs set clear templates for behaviour, which removes the ambiguity which some people use as cover for their unethical behaviour. The author also considers CoCs to be an excellent way of tracking the changing nature of ethical norms within our communities - which will increase the industry's diversity and inclusivity.

4.4 Other factors

The author considers that the reputational risk of unethical practices should be foremost in the mind of Data Analysts. The tarnished reputations of organisations which engage in unethical methods, like Facebook (Véliz, 2020), should serve as a warning that ethical behaviour is not seen as optional in the public's consciousness. Regular competitive analysis should be performed, with focus on public perception of competitors' ethics. The author feels that this would help their organisation develop better ethical practices.

Regulations like GDPR & the Equality Act provide rules which an ethical professional cannot transgress. But the author does not consider that the law is the only method by which to determine morals, nor that teleological moral theories are sufficient. Instead, professionals need to have a thorough grounding in the kategorischer Imperativ (Kant, 1785). Data Analysts also need to be aware of modern guidelines which can help them develop a culture of ethics. The author recommends that close cooperation with the UK's Centre for Data Ethics should be a prerequisite of anyone working with sensitive data. Finally, the Civil Service Code sets out clear expectations of ethical behaviour for government employees (Fuertes, 2021).

4.5 Conclusion

There is a complex relationship between ethics and the data professional. It is not sufficient for a modern analyst to divorce their analytical process from the actions taken as a result of the analysis.

For example, it would have been unethical for the author to report a suspicious interaction to the security services based on the data analysis without first ensuring the rigour of the data and understanding the biases present in any training set.

As discussed in §3.2, it is not ethical to draw inferences from apparent patterns in the data without a thorough business understanding - i.e. an expert's view of the data.

Similarly, the open and transparent publication of the ML models and algorithms used by Government departments should be seen as an important step to developing ethical behaviour.

On reflection, there needs to be a greater emphasis in the Civil Service not just of the mechanics and application of modern ML techniques - but also on how to conduct such investigations in an ethical manner. Having a mandatory professional body for practitioners with an enforceable set of ethics would improve trust in both the individual Civil Servant and the state.

References

- Allay (UK) Ltd v Gehlen, (4 February 2021)

-

Anderson

,

R. J.

Florence Nightingale: The Biostatistician

( ) CLOCKSS Archive . doi:10.1124/mi.11.2.1 -

Baker

,

Monya

1,500 scientists lift the lid on reproducibility

( ) Springer Science and Business Media LLC . doi:10.1038/533452a -

Bassot

,

Barbara

The Reflective Journal

( ) Macmillan Education UK . doi:10.1057/978-1-137-60349-4 - Bellman, R. (1957). Dynamic programming. Princeton Univ. Pr. 9780691146683

- Busch, P., Smith, S., Gill, A. Q., Harris, P., Fakieh, B., & Blount, Y. (2014). A study of government cloud adoption: The Australian context.

- CDDO. (2022, January 31). UK National Action Plan for Open Government 2021-2023. GOV.UK.

- Chapman, P., Clinton, J., Kerber, R., Khabaza, T., Reinartz, T., Shearer, C., & Wirth, R. (2000). CRISP-DM 1.0: Step-by-step data mining guide.

-

Chivers

,

Geoff

Utilising reflective practice interviews in professional development

( ) Emerald . doi:10.1108/03090590310456483 - Cummings, D. (2020, January 2). ‘Two hands are a lot’. Dominic Cummings’s Blog.

-

Dean

,

Jeffrey

&

Ghemawat

,

Sanjay

MapReduce

( ) Association for Computing Machinery (ACM) . doi:10.1145/1327452.1327492 -

Dobbin

,

Frank

&

Kalev

,

Alexandra

Why Doesn't Diversity Training Work? The Challenge for Industry and Academia

( ) Informa UK Limited . doi:10.1080/19428200.2018.1493182 -

Dors

,

Tania Mara

&

Van Amstel

,

Frederick M. C.

&

Binder

,

Fabio

&

Reinehr

,

Sheila

&

Malucelli

,

Andreia

Reflective Practice in Software Development Studios: Findings from an Ethnographic Study

( ) IEEE . doi:10.1109/cseet49119.2020.9206217 - Dowden, O. (2020). National Data Strategy. GOV.UK.

- Driscoll, J. (2007). Practising clinical supervision: A reflective approach for healthcare professionals.

- DSTL. (2020, December 7). Machine learning with limited data. GOV.UK.

- Fletcher, C. (2008). Appraisal, feedback and development: Making performance review work (4th ed). Routledge.

-

Fradkov

,

Alexander L.

Early History of Machine Learning

( ) Elsevier BV . doi:10.1016/j.ifacol.2020.12.1888 -

Fuertes

,

Vanesa

The rationale for embedding ethics and public value in public administration programmes

( ) SAGE Publications . doi:10.1177/01447394211028275 - GitHub. (2022). 195 results for all repositories written in Python. GitHub.

-

Herndon

,

T.

&

Ash

,

M.

&

Pollin

,

R.

Does high public debt consistently stifle economic growth? A critique of Reinhart and Rogoff

( ) Oxford University Press (OUP) . doi:10.1093/cje/bet075 -

Hulkower

,

Raphael

The History of the Hippocratic Oath: Outdated, Inauthentic, and Yet Still Relevant

( ) Albert Einstein College of Medicine . doi:10.23861/ejbm20102542 - Kant, I. (1785). Grundlegung zur Metaphysik der Sitten. Hartknoch.

-

Kepner

,

Jeremy

&

Gadepally

,

Vijay

&

Michaleas

,

Pete

&

Schear

,

Nabil

&

Varia

,

Mayank

&

Yerukhimovich

,

Arkady

&

Cunningham

,

Robert K.

Computing on masked data: a high performance method for improving big data veracity

( ) IEEE . doi:10.1109/hpec.2014.7040946 - Kutz, C. (2008). The repugnance of secret law. USC Center for Law and Philosophy.

- Layton, E. T. (1986). The revolt of the engineers: Social responsibility and the American engineering profession (Johns Hopkins pbk. ed). Johns Hopkins University Press.

-

Leclercq-Vandelannoitte

,

Aurélie

The new paternalism? The workplace as a place to work—and to live

( ) SAGE Publications . doi:10.1177/13505084211015374 - Lepeley, M. T., Beutell, N. J., Abarca Melo, N., & Majluf, N. S. (2021). Soft skills for human centered management and global sustainability.

-

Malarvizhi

,

Anusha Srirenganathan

&

Liu

,

Qian

&

Sha

,

Dexuan

&

Lan

,

Hai

&

Yang

,

Chaowei

An Open-Source Workflow for Spatiotemporal Studies with COVID-19 as an Example

( ) MDPI AG . doi:10.3390/ijgi11010013 -

Martimo

,

Kari-Pekka

&

Verbeek

,

Jos

&

Karppinen

,

Jaro

&

Furlan

,

Andrea D

&

Takala

,

Esa-Pekka

&

Kuijer

,

P Paul F M

&

Jauhiainen

,

Merja

&

Viikari-Juntura

,

Eira

Effect of training and lifting equipment for preventing back pain in lifting and handling: systematic review

( ) BMJ . doi:10.1136/bmj.39463.418380.be -

Matejka

,

Justin

&

Fitzmaurice

,

George

Same Stats, Different Graphs

( ) ACM . doi:10.1145/3025453.3025912 -

Moon

,

Jennifer A.

Reflection in Learning and Professional Development

( ) Routledge . doi:10.4324/9780203822296 -

Trust and its determinants

( ) Organisation for Economic Co-Operation and Development (OECD) . doi:10.1787/869ef2ec-en -

O'Leary

,

Daniel E.

Armchair Auditors: Crowdsourcing Analysis of Government Expenditures

( ) American Accounting Association . doi:10.2308/jeta-51225 - O’Neill, O. (2010). A question of trust (5. printing). Cambridge Univ. Press.

-

Iron Ring [From the Editor]

( ) Institute of Electrical and Electronics Engineers (IEEE) . doi:10.1109/mcs.2016.2536098 -

Pajankar

,

Ashwin

Introduction to Python 3

( ) Apress . doi:10.1007/978-1-4842-7410-1_1 -

Pashler

,

Harold

&

McDaniel

,

Mark

&

Rohrer

,

Doug

&

Bjork

,

Robert

Learning Styles

( ) SAGE Publications . doi:10.1111/j.1539-6053.2009.01038.x -

Perkel

,

Jeffrey M.

Why Jupyter is data scientists’ computational notebook of choice

( ) Springer Science and Business Media LLC . doi:10.1038/d41586-018-07196-1 - Psaltis, A. G. (2017). Streaming data: Understanding the real-time pipeline. Manning.

-

Roberson

,

Quinetta

&

Quigley

,

Narda R.

&

Vickers

,

Kamil

&

Bruck

,

Isabella

Reconceptualizing Leadership From a Neurodiverse Perspective

( ) SAGE Publications . doi:10.1177/1059601120987293 - Rogers , Russell R. ( ) Springer Science and Business Media LLC . doi:10.1023/a:1010986404527

-

Russo

,

Daniel

&

Stol

,

Klaas-Jan

Gender Differences in Personality Traits of Software Engineers

( ) Institute of Electrical and Electronics Engineers (IEEE) . doi:10.1109/tse.2020.3003413 - Sandy, G. (2005). Ethical Leadership of ICT Professional Bodies.

- Spruce, H. (2020, January 6). Free Personal Development Plan (PDP) Example Template PDF. The Hub | High Speed Training.

-

Stancin

,

I.

&

Jovic

,

A.

An overview and comparison of free Python libraries for data mining and big data analysis

( ) IEEE . doi:10.23919/mipro.2019.8757088 - Tamkin, P., Miller, L., Williams, J., & Casey, P. (2013). Understanding Occupational Regulation. UK Commission for Employment and Skills, Evidence Report 67, 141.

-

Theodorou

,

Vasileios

&

Abelló

,

Alberto

&

Thiele

,

Maik

&

Lehner

,

Wolfgang

Frequent patterns in ETL workflows: An empirical approach

( ) Elsevier BV . doi:10.1016/j.datak.2017.08.004 - Thornton, D. (2018). Professionalising Whitehall: Responsibilities of the Head of Function for Digital, Data and Technology. 12.

-

Tourani

,

Parastou

&

Adams

,

Bram

&

Serebrenik

,

Alexander

Code of conduct in open source projects

( ) IEEE . doi:10.1109/saner.2017.7884606 - Tukey, J. W. (1977). Exploratory data analysis. Addison-Wesley Pub. Co.

- UK Government. (2020, December 4). Digital, Data and Technology Profession Capability Framework. GOV.UK.

- Véliz, C. (2020). Privacy is power: Why and how you should take back control of your data.

-

Weiss

,

Moritz

&

Biermann

,

Felix

Cyberspace and the protection of critical national infrastructure

( ) Informa UK Limited . doi:10.1080/17487870.2021.1905530 - Whittington, K. E. (2018). Free Speech and the Diverse University (SSRN Scholarly Paper ID 3334118). Social Science Research Network.

-

Wilkinson

,

Mark D.

&

Dumontier

,

Michel

&

Aalbersberg

,

IJsbrand Jan

&

Appleton

,

Gabrielle

&

Axton

,

Myles

&

Baak

,

Arie

&

Blomberg

,

Niklas

&

Boiten

,

Jan-Willem

&

da Silva Santos

,

Luiz Bonino

&

Bourne

,

Philip E.

&

Bouwman

,

Jildau

&

Brookes

,

Anthony J.

&

Clark

,

Tim

&

Crosas

,

Mercè

&

Dillo

,

Ingrid

&

Dumon

,

Olivier

&

Edmunds

,

Scott

&

Evelo

,

Chris T.

&

Finkers

,

Richard

&

Gonzalez-Beltran

,

Alejandra

&

Gray

,

Alasdair J.G.

&

Groth

,

Paul

&

Goble

,

Carole

&

Grethe

,

Jeffrey S.

&

Heringa

,

Jaap

&

’t Hoen

,

Peter A.C

&

Hooft

,

Rob

&

Kuhn

,

Tobias

&

Kok

,

Ruben

&

Kok

,

Joost

&

Lusher

,

Scott J.

&

Martone

,

Maryann E.

&

Mons

,

Albert

&

Packer

,

Abel L.

&

Persson

,

Bengt

&

Rocca-Serra

,

Philippe

&

Roos

,

Marco

&

van Schaik

,

Rene

&

Sansone

,

Susanna-Assunta

&

Schultes

,

Erik

&

Sengstag

,

Thierry

&

Slater

,

Ted

&

Strawn

,

George

&

Swertz

,

Morris A.

&

Thompson

,

Mark

&

van der Lei

,

Johan

&

van Mulligen

,

Erik

&

Velterop

,

Jan

&

Waagmeester

,

Andra

&

Wittenburg

,

Peter

&

Wolstencroft

,

Katherine

&

Zhao

,

Jun

&

Mons

,

Barend

The FAIR Guiding Principles for scientific data management and stewardship

( ) Springer Science and Business Media LLC . doi:10.1038/sdata.2016.18 -

Zhang

,

Jing

&

Puron-Cid

,

Gabriel

&

Gil-Garcia

,

J. Ramon

Creating public value through Open Government: Perspectives, experiences and applications

( ) IOS Press . doi:10.3233/ip-150364

Appendices

Appendix A – Apprenticeship Standard Specialist Pathway Skills

[Redacted]

Appendix B - PDP

This personal development plan follows a generally accepted template (Spruce, 2020)

|

What are your

long-term goals? |

What are your specific career goals? | What are the key skills needed for each one of your goals? |

What skills do you

need to work on? |

What actions are you going to take? | When are you going to complete your training by? |

| Do interesting things with technology for social good. | Complete MSc | Academic writing skills.

Critical reflection. Referencing. |

Critical reflection. | Attend ACE workshops. | 8-12 Months |

| Become an expert in DNS infrastructure | Understanding of common DNS tools.

Knowledge of DNSSEC. |

Knowledge of DNSSEC. | Take internal training.

Read reference books. Use O'Rielly video library. |

6 Months | |

| Open source the code which protects critical national infrastructure. | Influencing skills.

Knowledge of UK Intellectual Property laws, including Crown Copyright. |

Influencing skills | Work with colleagues to practise my skills. | 12-24 Months |

Appendix C - CPD Description

Available at https://www.qa.com/course-catalogue/courses/practical-machine-learning-qadmspml/

Appendix D - BCS Code of Conduct

(Taken from https://www.bcs.org/media/2211/bcs-code-of-conduct.pdf)