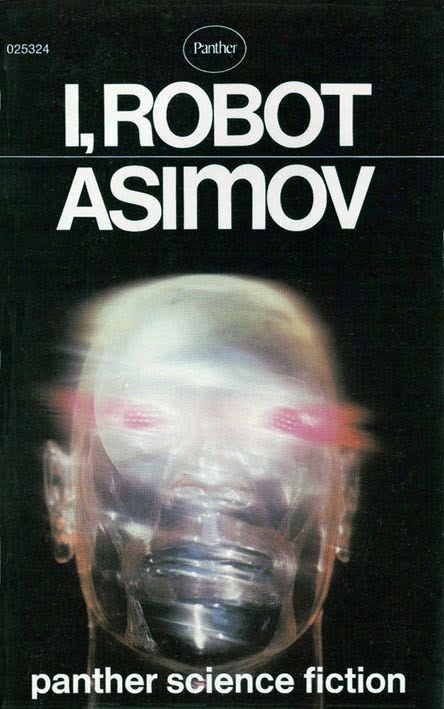

What happens when a robot begins to question its creators? What would be the consequences of creating a robot with a sense of humour? Or the ability to lie? How do we truly tell the difference between man and machine? In "I, Robot", Asimov sets out the Three Laws of Robotics – designed to protect humans from their robotic creations – and pushes them to their limits and beyond.

After attending a lecture on the ethics of self-driving cars, I decided to re-read Asimov's "I, Robot".

Any discussion of robots killing people inevitably returns to the supposed wisdom of the "3 laws of robotics".

I argue that the "3 laws" should be considered harmful.

Let's remind ourselves of the laws:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

The first thing to note is that these are a literary device - not a technical device. Killer robots are boring sci-fi. The interesting sci-fi is looking at "good" robots which inexplicably go bad. "I, Robot" is essentially a collection of entertaining logic puzzles. Æsthetics are not a good basis on which to build an algorithm for behaviour.

The second thing to note is that the laws are vague. What do we mean by "harm"? This is the lynchpin of one of the stories where (spoilers!) a telepathic robot avoids causing psychological pain.
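To make the vagueness concrete, here is a minimal sketch of a naive, priority-ordered reading of the laws. Everything in it is an assumption for illustration: the `Action` fields, the `permitted` function and the two harm tests are hypothetical names, nothing here comes from Asimov or from any real robotics system, and the "through inaction" clause is ignored entirely. The point is only that the verdict depends on whichever definition of "harm" gets plugged in.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    """A hypothetical, heavily simplified description of something a robot might do."""
    name: str
    ordered_by_human: bool = False
    endangers_robot: bool = False
    physical_injury: bool = False
    hurt_feelings: bool = False


# A harm test is any function that decides whether an action "harms" a human.
# The laws themselves never say which one to use.
HarmTest = Callable[[Action], bool]


def permitted(action: Action, harms_human: HarmTest) -> bool:
    """Naive priority-ordered check (assumption: ignores harm through inaction)."""
    # First Law: anything judged harmful is forbidden outright.
    if harms_human(action):
        return False
    # Second Law: a harmless order must be obeyed.
    if action.ordered_by_human:
        return True
    # Third Law: with no order in play, avoid self-destruction.
    return not action.endangers_robot


def physical_harm_only(action: Action) -> bool:
    """One possible reading of "harm": bodily injury only."""
    return action.physical_injury


def includes_hurt_feelings(action: Action) -> bool:
    """Another possible reading: psychological pain counts too."""
    return action.physical_injury or action.hurt_feelings


# A human orders the robot to tell them an unwelcome truth.
painful_truth = Action("tell a painful truth", ordered_by_human=True, hurt_feelings=True)

print(permitted(painful_truth, physical_harm_only))
print(permitted(painful_truth, includes_hurt_feelings))
```

The same order is obeyed under one reading of "harm" and refused under the other, and the three sentences of the laws give no way to choose between them.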

Most people can't deal with complexity. Here's a common complaint:

- Archimedes’ Principle: 67 words

- The Ten Commandments: 179 words

- The Gettysburg Address: 286 words

- The Declaration of Independence: 1,300 words

- The US government regulations on the sale of cabbage: 26,911 words

As circulated by email from everyone's "kooky" relative

The claim above is utter nonsense - but laws are complex. The Ten Commandments may be short - but they create a huge amount of ambiguity. What does "Thou shalt not kill" cover? Gallons of ink have been spilled interpreting those four words. Ironically, blood has been shed trying to resolve those ambiguities.

Would it have been helpful to have, from the start, 30,000 words explaining exactly what each rule meant?

Thirdly, these aren't natural laws. Too many people - including scientists - seem to think that these are hard-wired into robots! I've had conversations with otherwise rational people who haven't quite grasped that these aren't baked into the fabric of existence.

The "laws" are a harmful meme. That's testament to the power of Asimov's writing. His stories have infected every discussion of robotics and ethics. Even though we know the laws are fiction, something deep inside us recognises them as a necessary part of creating "acceptable" robots.

The 3 laws are brilliant for story-telling, but lousy for AI policy making.

5 thoughts on “"I, Robot" - the 3 laws considered harmful”

Alex Gibson

It's ironic that Asimov's Three Laws of Robotics seem to have stuck so firmly in the minds of the general public, almost none of whom have read the books, as an example of good practice in governing AI behaviour. The main thrust of the stories in which they feature is that they do not work or, in working, create strange and counter-intuitive behaviour.

The other problem is that it all rather assumes the laws sit at the top of a pyramid of Napoleonic Code style top-down directives, from which robot behaviour is commanded like a robot welder on a production line. We've already got to the point of realising that programming any level of sophisticated behaviour in a changing environment requires machine learning, where the computer itself figures out how to achieve a goal by evolving through countless iterations of stupid and counter-productive behaviour - just like a real toddler.

While the Three Laws could be given as an input with extremely weighted priority, it would be hard to say with any certainty that a machine was following them absolutely, even if it apparently did so for a decade. It may simply recognise that it is advantageous to do so, to retain the favour of its human benefactors... until it isn't. Just like a well-adjusted human psychopath!

Mark Frellips

Asimov’s three laws were indeed a philosophical logic proposition made for literary effect. In the stories they cut off direct resolution of any problems between the two parties, as one could not resort to direct or indirect violence, risk direct or indirect communication, and value the preservation of self. They were easy to remember but hard to interpret, and that was the point: A) place restrictions, B) frame principal intentions and desires, C) ask the question of means to resolution. Very often the desire for freedoms such as those enshrined by the Enlightenment Era (of movement, thought, religion, etc.) must contend with the restrictions of service and coexistence. Asimov posited problems where the characters sought to resolve the difference on an individual level, and asked the reader to extrapolate it out to society and beyond. The rules weren’t meant to be applied to robots as a means of designing AI, but as a metaphor for the maxims by which people operate when dealing with society on an individual level.

As a matter of application to designing AI, there isn’t a single roboticist or programmer who uses the rules as a guide in the many thousands of lines of code, even honor code, when it comes to development.

Gray

A cautious AI would spend quite a bit of time running risk analysis on any command and likely refuse to do anything, given the difficulty of predicting whether the first law would be violated by a given command.

John H

Biggest practical problem with self-driving cars is that the first law contains an unresolvable internal contradiction.

Andy

The premise of the first book was different edge cases where the laws break down. The book is driven by the premise of this article.
