One of my first jobs was as a nudity moderator for Vodafone. People would send in photos and videos and I'd have to manually classify whether they featured nudity or were otherwise unacceptable.

It was a bizarre job - one I've discussed before - but today, wouldn't we just throw an AI at it?

I recently read "How AI/ML algorithms see nudity in images - Comparison of image moderation APIs by Microsoft, Google, Amazon and Clarifai" - a blog post by DataTurks.

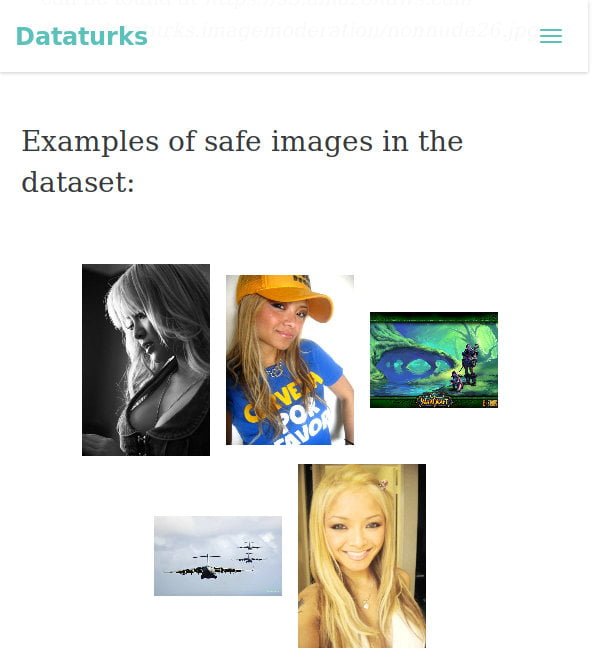

In it, they use a test set of images - a mix of "safe" and "nude" - to see how accurate various automated image moderators are.

I downloaded the dataset (on my personal computer - not my work laptop!). It is a dreadful dataset and an amazing example of how poor data can give misleading results.

Lack of data

There are 180 images in the set. 90 nude, 90 innocent. That's not enough images to do a proper comparison. Even if only one nude image is misclassified, that will dramatically change the reported accuracy of the system.

For a test like this, you need to compare hundreds of thousands of images; otherwise, small errors give a distorted impression of reliability.
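
To put rough numbers on that, here is a minimal Python sketch. It is my own illustration rather than anything from the DataTurks post: the function names are made up, the 81-out-of-90 score is a hypothetical result, and the Wilson score interval is just one common way of estimating the uncertainty around a measured proportion.

```python
import math
from typing import Tuple


def accuracy_step(total: int) -> float:
    """How far one extra misclassified image moves the accuracy score."""
    return 1 / total


def wilson_interval(correct: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for a measured proportion."""
    p = correct / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half, centre + half


# Hypothetical scores: 90% measured accuracy on a small and a large test set.
for correct, total in [(81, 90), (90_000, 100_000)]:
    low, high = wilson_interval(correct, total)
    print(f"n={total}: one error moves accuracy by {accuracy_step(total):.4%}, "
          f"plausible range {low:.1%} to {high:.1%}")
```

With only 90 images per class, every single misclassification moves the score by more than a percentage point, and the plausible range around a measured 90% is more than ten points wide. At 100,000 images, the same range shrinks to a fraction of a point.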

Lack of diversity

If you look at the provided sample of "safe" images, you may notice something odd.

All the women have blonde hair and fair skin.

I'm not going to get into a discussion on ethnicity and the Fitzpatrick Scale - but out of the 90 nude images, I counted only 15 images featuring non-white women.

By comparison, there were around 31 blondes and 7 redheads. Does that sound representative of the world?
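
Counting like this is easy to automate once each image has been tagged. A minimal sketch, assuming someone has hand-tagged the images - the tag names and the `group_shares` helper are hypothetical, and the only figure taken from this post is 15 non-white women out of 90 nude images:

```python
from collections import Counter


def group_shares(tags):
    """Return each tag's share of the data set as a fraction of all images."""
    counts = Counter(tags)
    total = sum(counts.values())
    return {tag: n / total for tag, n in counts.items()}


# Hypothetical hand-assigned tags reproducing the count above:
# 15 of the 90 nude images feature non-white women.
tags = ["non-white"] * 15 + ["white"] * 75
shares = group_shares(tags)
print(f"non-white: {shares['non-white']:.1%}")  # prints "non-white: 16.7%"
```

Comparing those shares against whatever population the moderator is meant to serve is a quick way to spot a skewed set before trusting any accuracy figure built on it.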

Lack of gender diversity

To quote Kryten:

The nude data set only features women. Male nudity is, apparently, not worth considering.

Perhaps that's why so many of my friends get unsolicited dick-pics? No one is training the models correctly?

Copyright and Consent

Who owns the copyright in the images? There's no provenance provided. While some of the photos are clearly professionally shot - others look like they are stolen selfies.

Do people know that their intimate photos are being used to train and evaluate machine learning models? Can they withdraw their consent to being part of this data set?

Source

This database of images is called Yet Another Computer Vision Index To Datasets (YACVID) - and was last updated in 2009.

I hope no one is using it to train an AI. I'd also argue that it is unsuitable for evaluating AI performance.

One thought on “Nudity detection in AI - why diverse data sets matter”

Joy Buolamwini's Wikimania 2018 keynote presentation, "The Dangers of Supremely White Data and The Coded Gaze", is very pertinent:

https://www.youtube.com/watch?v=ZSJXKoD6mA8